How to Get More From Your OSINT Framework

Security teams work with information that is already visible online. OSINT supports that by gathering open source intelligence from public data such as social media activity, code repositories, exposed assets, and basic records that reveal how a digital footprint shifts over time.

Unfortunately, hackers also study the same material. They use it to prepare attacks, tailor phishing, or find weak points that have gone unnoticed. This blog explores how OSINT has grown into a more disciplined practice. The focus is on clearer methods and smarter collection so your team can turn scattered public data into insight that supports faster security decisions (before hackers spot the same exposures).

Why OSINT must evolve for modern security operations

Public data has grown to a scale that makes manual monitoring unrealistic. New domains appear, employees update profiles, cloud assets shift, and code is pushed to repositories throughout the day. Each change adds detail to your organisation’s digital footprint, and some of it creates exposure that is easy to overlook.

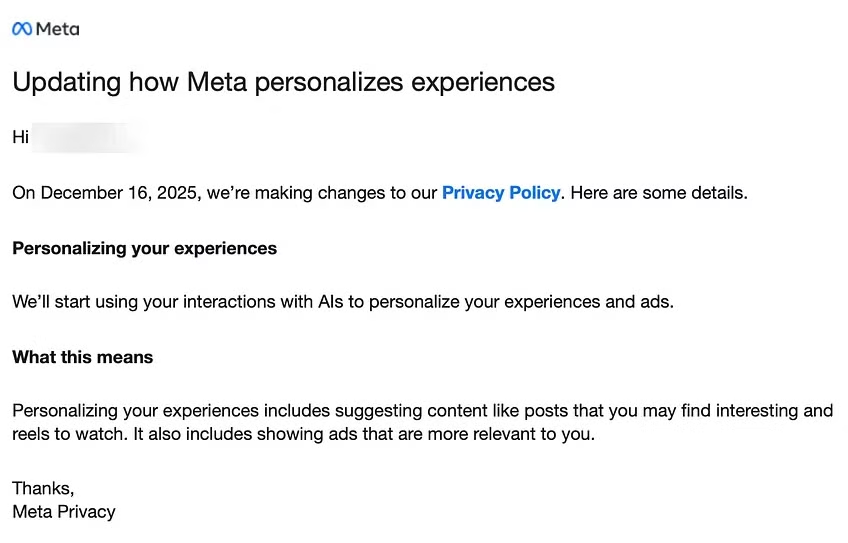

For example, Meta has just announced that AI prompts are going to be used to inform ads. And as The OSINT Newsletter has recently pointed out, “If Meta can access that data, others potentially can too.”

Threat actors depend on this visibility. They scan for misconfigurations, leaked personal data, and infrastructure clues that help them plan their next step. Security teams need OSINT methods that match this pace. Stronger OSINT workflows and smarter collection give teams time to act before a small exposure turns into an incident.

The core pillars of an advanced OSINT program

A mature OSINT function rests on a small set of practices that guide how information is gathered, assessed, and turned into something a security team can use. These pillars shape the workflow from the first question to the final insight.

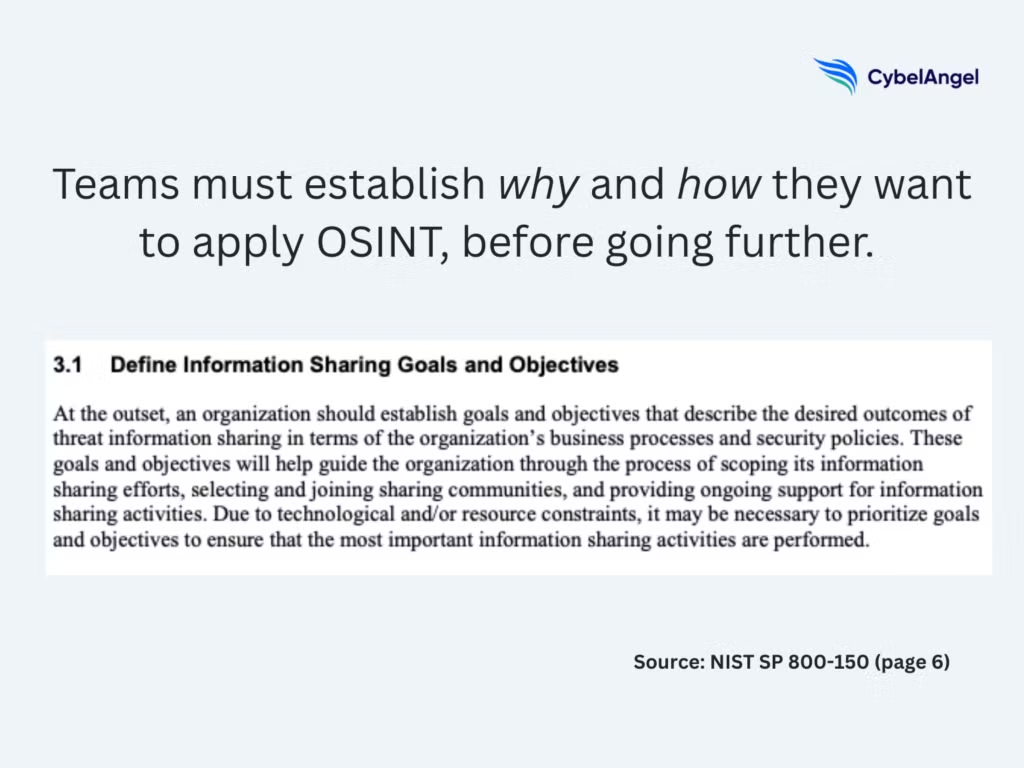

- Collection planning is recommended by NIST SP 800-150 to give analysts a starting point. It defines the target, the scope, and the questions the team needs to answer. This avoids unfocused searches and helps direct effort toward data that has real value for threat intelligence gathering.

- Automated data acquisition keeps pace with the constant movement of publicly available data. Security teams depend on scheduled lookups, API-based collection, and scanning tools that surface new domains, leaked records, exposed assets, or shifts in social media activity without manual effort. NATO’s OSINT Capability Q&A describes this as a means to “compensate for limited manpower and to increase sustainability.” MITRE ATT&CK also outlines how cybercriminals use similar reconnaissance tools at the same time.

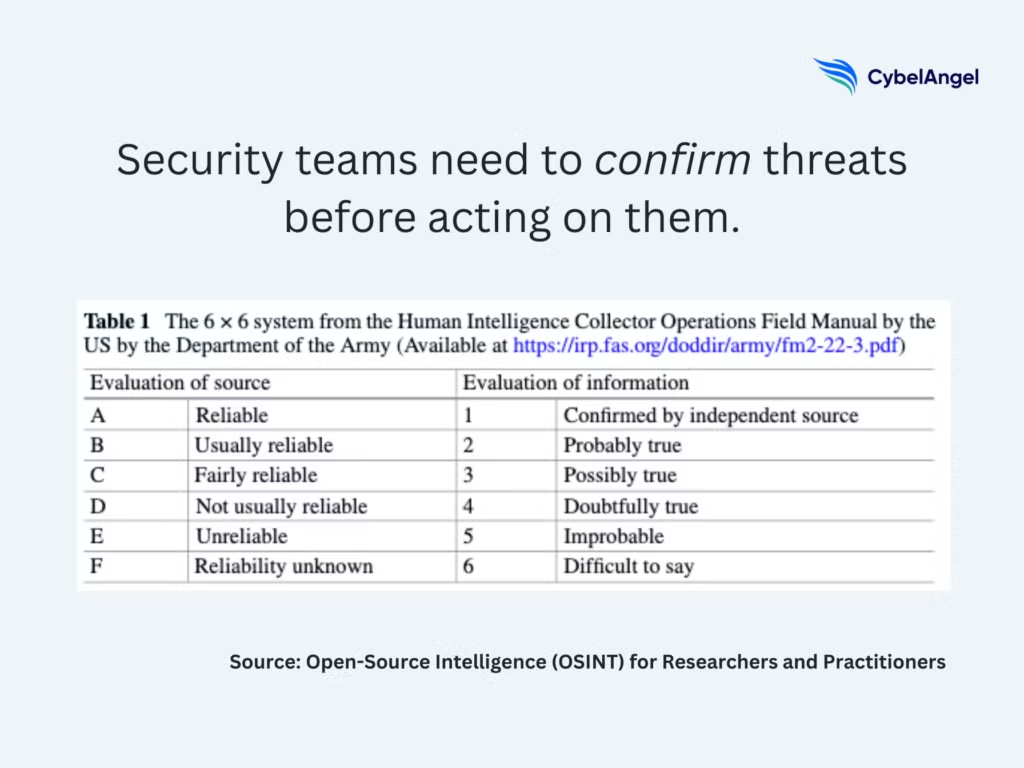

- Source evaluation and validation protect the quality of the investigation. Analysts assess where the data came from, how reliable it is, and whether it can be cross-checked against other public sources and search engines. This reduces false signals and limits the risk of acting on incomplete or misleading information. Here is a framework which can be used to assess publicly available sources.

- Correlation and enrichment turn raw findings into context. A single domain, webpage or username may lead to a chain of linked assets, past data breaches, or public code that reveals configuration details. These pivots create a clearer picture of how different pieces of publicly available information connect.

- Actionable intelligence production closes the loop. The team delivers a concise finding that supports a decision. That may involve alerting on a new credential leak, flagging misconfigured cloud storage, or documenting a pattern of behaviour that could precede a cyberattack. IBM describes this form of threat intelligence as a means to “trigger other response actions.”

The next section will explore the methodologies that best reinforce these pillars.

Building stronger OSINT methodologies

NIST SP 800-150 outlines some best practices that apply to OSINT methodologies. It states that a strong OSINT investigation starts with a clear objective. Before collecting anything, decide what question you need to answer and which parts of your organisation or infrastructure fall within scope. This keeps the work focused and limits time spent on signals that will not influence a security decision.
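One way to make the objective and scope explicit is to write them down as data before any collection starts. The sketch below is only an illustration: the `CollectionPlan` class, its field names, and the `example.com` domain are assumptions, not something prescribed by NIST SP 800-150.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CollectionPlan:
    """One objective, an explicit scope, and the questions to answer."""

    objective: str
    in_scope_domains: tuple[str, ...]
    questions: tuple[str, ...] = ()

    def covers(self, hostname: str) -> bool:
        """Return True if hostname is an in-scope domain or a subdomain of one."""
        host = hostname.lower().rstrip(".")
        for domain in self.in_scope_domains:
            domain = domain.lower()
            if host == domain or host.endswith("." + domain):
                return True
        return False


# Hypothetical plan; example.com is a placeholder domain.
plan = CollectionPlan(
    objective="Find credentials exposed in public code",
    in_scope_domains=("example.com",),
    questions=("Which public repositories mention our domains?",),
)

for candidate in ("vpn.example.com", "notexample.com"):
    status = "in scope" if plan.covers(candidate) else "out of scope"
    print(f"{candidate}: {status}")
```

Checking each new lead against the plan is a cheap way to notice when an investigation starts drifting away from the original question.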

Source reliability comes next. Public data varies widely in accuracy and intent, so treat every source with a degree of caution. Note where you’re gathering information from, how it was discovered, and any uncertainty that may affect its value. A small habit like this reduces false leads and helps analysts stay focused on genuine vulnerabilities.
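A minimal sketch of that habit is shown below. It borrows the letter and number grades of the widely used Admiralty (NATO) grading scheme, which the text above does not name, so treat that choice as an assumption. The `SourceNote` class and the "weaker than B2" rule are hypothetical examples of a team convention.

```python
from dataclasses import dataclass

# Grade labels from the Admiralty (NATO) source grading scheme.
RELIABILITY = {
    "A": "Completely reliable",
    "B": "Usually reliable",
    "C": "Fairly reliable",
    "D": "Not usually reliable",
    "E": "Unreliable",
    "F": "Reliability cannot be judged",
}
CREDIBILITY = {
    1: "Confirmed by other sources",
    2: "Probably true",
    3: "Possibly true",
    4: "Doubtful",
    5: "Improbable",
    6: "Truth cannot be judged",
}


@dataclass(frozen=True)
class SourceNote:
    """Where a piece of data came from and how far it can be trusted."""

    source: str
    discovered_via: str
    reliability: str
    credibility: int
    caveat: str = ""

    def __post_init__(self):
        if self.reliability not in RELIABILITY:
            raise ValueError(f"unknown reliability grade: {self.reliability!r}")
        if self.credibility not in CREDIBILITY:
            raise ValueError(f"unknown credibility grade: {self.credibility!r}")

    @property
    def grade(self) -> str:
        return f"{self.reliability}{self.credibility}"

    def needs_corroboration(self) -> bool:
        # Hypothetical team rule: anything weaker than B2 needs a second source.
        return self.reliability > "B" or self.credibility > 2


note = SourceNote(
    source="public paste site post",
    discovered_via="keyword search",
    reliability="C",
    credibility=3,
    caveat="poster is anonymous",
)
print(note.grade, "needs corroboration:", note.needs_corroboration())
```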

OSINT frameworks improve when they follow a repeatable workflow. Document the steps you take, from the initial observation through to the final insight. Capture the tools used, the filters applied, and the reasoning behind each action. Over time, this becomes a pattern your team can refine, automate, and share.

Pivoting is where most investigations gain depth. A single domain can reveal hosting details, IP blocks, social accounts, leaked credentials, or metadata from public files. The key is to move with intention. Record each connection and explain why it matters so another analyst could retrace the path with confidence.

An audit trail supports all of this. Track when data was collected, how it has changed, and where it has been used. It strengthens the quality of your findings, supports peer review, and keeps your team aligned on decision-making.
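As a rough illustration, an audit trail can be kept as an append-only list in which every entry stores the hash of the one before it, so later edits become detectable. This is a sketch under assumptions: the function names and entry fields are invented for the example, and a real deployment would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(entry: dict) -> str:
    """Hash every field except the stored hash itself."""
    payload = {k: v for k, v in entry.items() if k != "hash"}
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_entry(trail: list, action: str, detail: dict) -> dict:
    """Add a timestamped entry that is chained to the previous one."""
    entry = {
        "collected_at": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "detail": detail,
        "prev_hash": trail[-1]["hash"] if trail else GENESIS,
    }
    entry["hash"] = _digest(entry)
    trail.append(entry)
    return entry


def verify(trail: list) -> bool:
    """Return False if any entry was altered or the chain was reordered."""
    prev = GENESIS
    for entry in trail:
        if entry["prev_hash"] != prev or entry["hash"] != _digest(entry):
            return False
        prev = entry["hash"]
    return True


trail = []
append_entry(trail, "whois_lookup", {"domain": "login-portal.example"})
append_entry(trail, "used_in_report", {"report": "hypothetical-report-01"})
print("trail intact:", verify(trail))
```

Because each hash covers the previous one, a reviewer only has to re-run `verify` to confirm that nothing in the record changed after collection.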

☑️ Use case

A security team wants to understand how a recent phishing campaign targeted their employees. Instead of collecting screenshots and links in an ad-hoc way, they create a simple workflow that follows OSINT techniques.

They collect data in a single sheet. Each phishing domain is logged with the date, source, screenshot, and any employee reports. The analyst then pivots from each domain to its WHOIS data, hosting provider, SSL/TLS certificate records, and any linked IP addresses.

Every step is noted so another team member can repeat it. Within a week, the team has a clear picture of the infrastructure behind the campaign and can alert others before new variants appear.
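The sheet described in this use case could be modelled roughly as follows. Everything here is hypothetical: the class names, the `.example` domains, the dates, and the documentation-range IP address are placeholders, and the lookups themselves (WHOIS, certificates, DNS) are assumed to happen elsewhere.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Pivot:
    kind: str    # e.g. "whois_registrar", "hosting", "certificate", "ip"
    value: str
    reason: str  # why the analyst followed this lead


@dataclass
class DomainRecord:
    domain: str
    logged_on: str  # ISO date the domain was added to the sheet
    source: str     # e.g. "employee report"
    pivots: list[Pivot] = field(default_factory=list)

    def add_pivot(self, kind: str, value: str, reason: str) -> None:
        self.pivots.append(Pivot(kind, value, reason))


def shared_infrastructure(records):
    """Map each pivot value seen on more than one domain to those domains."""
    owners = defaultdict(set)
    for record in records:
        for pivot in record.pivots:
            owners[(pivot.kind, pivot.value)].add(record.domain)
    return {key: sorted(doms) for key, doms in owners.items() if len(doms) > 1}


first = DomainRecord("payroll-update.example", "2024-05-02", "employee report")
first.add_pivot("ip", "203.0.113.10", "A record at time of report")
first.add_pivot("hosting", "Hypothetical Hosting Ltd", "owner of the IP block")

second = DomainRecord("login-portal.example", "2024-05-03", "mail gateway log")
second.add_pivot("ip", "203.0.113.10", "A record at time of report")

for (kind, value), domains in shared_infrastructure([first, second]).items():
    print(f"{kind} {value} is shared by {', '.join(domains)}")
```

Recording a reason with every pivot is what lets a second analyst retrace the path, and grouping by shared values is one simple way to surface the common infrastructure behind a campaign.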

Expanding your OSINT data sources

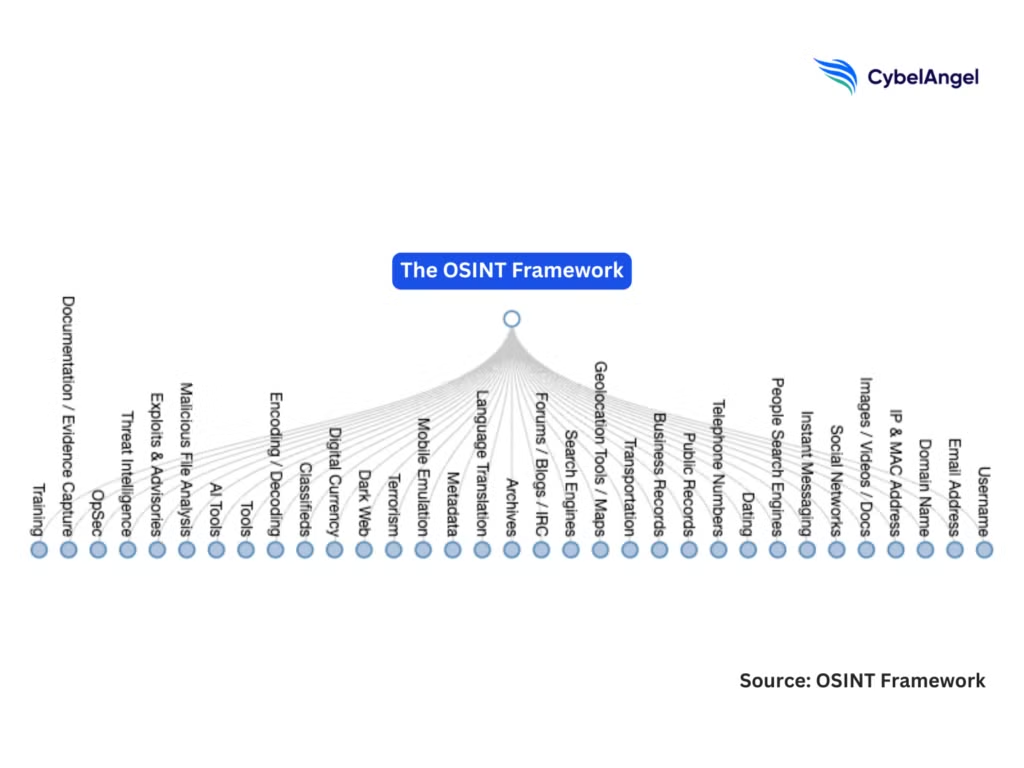

Modern OSINT draws from a wide range of public data for both internal and external attack surface management. Each category brings a different view of your organisation’s digital footprint, so grouping sources by function helps keep the workflow organised. It also makes it easier to decide which sources matter for a particular investigation.

1. Public profiles and social media platforms

Social media profiles reveal how people and organisations present themselves, along with the connections and behaviours that may expose risk.

- Social media activity

- Public posts and comments

- Group memberships

- Follower networks

- Basic metadata and profile changes

2. Technical infrastructure and attack surface data

Infrastructure sources show what is reachable on the open internet and highlight services that may be exposed without intention.

- Shodan

- Censys

- WHOIS and domain records

- Certificate Transparency logs

- Cloud storage visibility checks

- Exposed services and misconfigurations

3. Code and developer ecosystems

Code platforms often surface credentials, configuration details, and other sensitive information introduced through day-to-day development.

- Public GitHub commits

- Leaked API keys

- Configuration files

- Exposed credentials

- Package and dependency metadata
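To show how exposed credentials of this kind are typically spotted, here is a small pattern-matching sketch. The three patterns are illustrative assumptions rather than a complete rule set, and the sample uses the placeholder access key ID from AWS documentation plus a made-up API key. Dedicated secret scanners cover far more formats and add entropy checks.

```python
import re

# Illustrative patterns only; real scanners ship many more rules.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "generic_assignment": re.compile(
        r"""\b(?:api[_-]?key|secret|token)\b\s*[:=]\s*['"][^'"\s]{16,}['"]""",
        re.IGNORECASE,
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for every line that matches."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


# Hypothetical file content from a public commit.
sample = (
    'region = "eu-west-1"\n'
    'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    'api_key = "0123456789abcdef0123"\n'
)

for lineno, name in scan_text(sample):
    print(f"line {lineno}: possible {name}")
```

Matches like these are leads rather than confirmed leaks, so each one still needs the validation step described earlier before anyone acts on it.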

4. Forums, dark web spaces, and criminal marketplaces

These spaces provide early signs of targeting, planned activity, or interest in specific assets or industries.

- Breach discussions

- Access listings

- Dark web monitoring

- Malware updates

- Targeting chatter related to specific industries

5. Public records and administrative datasets

Administrative records help build context around ownership, structure, and operational footprint, which can support both attribution and exposure analysis.

- Business filings and registries

- Property and corporate data

- Geospatial datasets

- Government publications

These groups offer a map of where relevant information sits. The next step is to automate parts of this collection so your team can monitor changes in real time, make informed decisions, and focus effort where it matters most.

Automation and real-time collection techniques

OSINT work now depends on machine-assisted collection. APIs allow continuous monitoring of domains, infrastructure changes, and public code updates so the team sees new signals as they appear.

Cybersecurity analysis tools such as SpiderFoot and Maltego automate the heavy lifting. They handle pivot chains, graph relationships, and recurring lookups that would take hours to repeat by hand.

Automation also supports real-time alerting. New domain registrations, leaked personal data, or credentials posted on public forums can trigger immediate notifications. Some teams use machine learning models to filter noisy data or highlight behavioural patterns that might lead to a security incident.
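As one hedged example of such a trigger, the sketch below flags newly observed domains that resemble a brand name. It assumes a feed of new registrations is already available from some provider, and the `examplecorp` brand, the 0.8 threshold, and the naive first-label comparison are all illustrative choices. A production check would parse domains against the public suffix list and handle homoglyphs.

```python
from difflib import SequenceMatcher


def lookalike_alerts(new_domains, brand, known_domains=(), threshold=0.8):
    """Flag domains whose first label contains or closely resembles the brand."""
    brand = brand.lower()
    known = {d.lower() for d in known_domains}
    alerts = []
    for domain in new_domains:
        domain = domain.lower()
        if domain in known:
            continue  # the organisation's own domains are not alerts
        label = domain.split(".")[0]  # naive: ignores subdomains and suffixes
        score = SequenceMatcher(None, label, brand).ratio()
        if brand in label or score >= threshold:
            alerts.append({"domain": domain, "score": round(score, 2)})
    return alerts


# Hypothetical batch from a new-registration feed.
feed = [
    "examp1ecorp.com",
    "examplecorp-login.net",
    "weather-news.org",
    "examplecorp.com",
]

for alert in lookalike_alerts(feed, "examplecorp", known_domains=("examplecorp.com",)):
    print(f"review {alert['domain']} (similarity {alert['score']})")
```

Run on a schedule, a check like this turns a raw registration feed into a short review queue instead of a list someone has to read by hand.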

External threat intelligence tools such as CybelAngel add another layer to this workflow. They automate discovery across a wide range of public and semi-public sources and surface exposures that would be hard to track manually. Real-time alerts highlight leaked data, misconfigured assets, or documents that reveal sensitive information.

All of this reduces manual collection time and lets analysts focus on interpretation rather than extraction.

☑️ Use case

A security team wants to track any public exposure of internal documents. Instead of running manual searches each week, they configure CybelAngel to monitor a set of keywords, file types, and domains linked to the organisation.

Within days, the platform alerts them to a sensitive slide deck stored in an open cloud folder. The analyst reviews the alert, confirms the source, and asks the owner to restrict access.

The team resolves the issue before the file spreads further and updates their process to prevent similar misconfigurations.

Integrating OSINT into security operations workflows

Once collected and analysed, OSINT needs to feed into daily security work. Many teams route relevant findings into their SIEM or SOAR platforms so alerts and enrichment happen in one place. This speeds up data analysis and reduces the chance that public signals are missed during an incident.

OSINT sources can also strengthen incident response by adding context from outside the organisation. For third-party risk reviews, public data helps validate how a supplier manages its own exposure.

In some cases, OSINT even supports collaboration with law enforcement agencies when a pattern of activity needs formal reporting. Recording these steps within a broader intelligence lifecycle keeps the process consistent and easier to maintain.

If you’re new to integrating OSINT into your workflows, here’s a quick checklist:

- Define which OSINT signals matter: Agree on the types of public data that should trigger action, such as domain changes, leaked credentials, or exposed assets.

- Route findings into existing tooling: Feed relevant OSINT alerts into your SIEM or SOAR so enrichment and triage happen in one place.

- Document how analysts should respond: Create a short playbook for reviewing, validating, and escalating OSINT findings during investigations.

- Use OSINT to support supplier reviews: Add public exposure checks to supply chain risk assessments and onboarding workflows.

- Store evidence with clear provenance: Keep a record of where the data came from, when it was collected, and how it informed the final decision.
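The routing and provenance items in this checklist can be sketched as a small normalisation step that runs before a finding is forwarded. The event fields, the severity mapping, and the function name below are assumptions made for illustration. They do not follow any particular SIEM or SOAR schema, so map them to whatever your platform expects.

```python
import json

# Hypothetical mapping from agreed signal types to triage severity.
SEVERITY = {
    "leaked_credentials": "high",
    "exposed_asset": "medium",
    "domain_change": "low",
}
REQUIRED = ("signal_type", "indicator", "source", "collected_at")


def to_siem_event(finding: dict) -> str:
    """Serialise a finding as JSON, refusing anything without provenance."""
    missing = [key for key in REQUIRED if not finding.get(key)]
    if missing:
        raise ValueError(f"finding is missing required fields: {missing}")
    event = {
        "event_kind": "osint_finding",
        "signal_type": finding["signal_type"],
        "indicator": finding["indicator"],
        "severity": SEVERITY.get(finding["signal_type"], "low"),
        "provenance": {
            "source": finding["source"],
            "collected_at": finding["collected_at"],
        },
        "analyst_note": finding.get("note", ""),
    }
    return json.dumps(event, sort_keys=True)


# Hypothetical finding; values are placeholders.
finding = {
    "signal_type": "leaked_credentials",
    "indicator": "user@example.com",
    "source": "public paste site",
    "collected_at": "2024-05-02T09:30:00+00:00",
    "note": "password appears hashed",
}
print(to_siem_event(finding))
```

Rejecting findings that lack a source or collection time keeps the provenance rule from depending on analyst memory.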

Legal and ethical considerations

OSINT work must follow clear boundaries. Analysts need to respect platform terms of service and avoid collecting data that is not intended for public view. Privacy is central. Public information can still contain personal details that require careful handling.

Here is some key legislation that influences OSINT practice:

- GDPR (EU): Sets strict rules on collecting and handling personal data, even when that data is publicly visible. Teams must justify purpose, minimise use, and apply safeguards.

- CCPA/CPRA (California): Regulates how organisations gather and process personal information about California residents, including data found through open sources.

- Computer Fraud and Abuse Act (US): Limits unauthorised access to systems or protected data. OSINT remains lawful only when collection stays within public, intentionally available information.

- Platform-specific terms and regional privacy laws: Many platforms restrict automated scraping or bulk data collection. Local privacy frameworks may also affect how long data can be stored or how it can be shared.

Operational security also matters. Teams should avoid techniques that expose their own identity or create unnecessary risk during collection. Finally, public data can be incomplete or misleading, so analysts need to avoid misattribution and document any uncertainty in their findings.

☑️ Use case

An analyst is investigating a suspicious domain that appears linked to a phishing campaign.

Instead of visiting it from a work machine, they route the request through a controlled environment with a clean browser profile and no organisational identifiers. They also avoid interacting with any login pages or forms.

By keeping their own footprint minimal and separating investigation systems from corporate networks, the team reduces the chance of tipping off the attacker or exposing internal infrastructure during collection.

How CybelAngel supports advanced OSINT work

Strong OSINT programs rely on steady visibility across public and semi-public sources.

CybelAngel helps security teams maintain that visibility by automating discovery, monitoring for exposure, and highlighting signals that may need action.

The platform surfaces leaked data, open cloud folders, exposed services, and other issues that would be hard to track manually. Analysts can then review each finding with context and feed it into their existing workflows.

This gives the team more time to focus on judgement and reduces the chance that an important change slips past unnoticed.

Conclusion

OSINT plays a steady role in helping security teams understand what is happening across their public footprint. When methods are clear and collection is consistent, those signals become far more useful.

CybelAngel helps by spotting exposures that are easy to miss and giving analysts the time to focus on interpretation rather than constant searching. With this support in place, OSINT becomes a dependable part of everyday security decisions.