The Hidden Consequences of Security Tool Sprawl and SOC Alert Fatigue

SOC teams work at the speed of their telemetry. And when those signals are steady and the tooling is coherent, analysts can work smoothly. But when the environment is fragmented, everything slows down. Investigation paths stretch out, simple alerts become multi-step puzzles, and high-risk behavior hides inside the noise.

Tool sprawl accelerates that fragmentation. Each new product adds another layer of cognitive effort. The workload rises faster than analysts can adjust, and routine triage becomes a grind of duplicated alerts and conflicting dashboards. Let’s unpack the dangers associated with security tool sprawl and how to fix them.

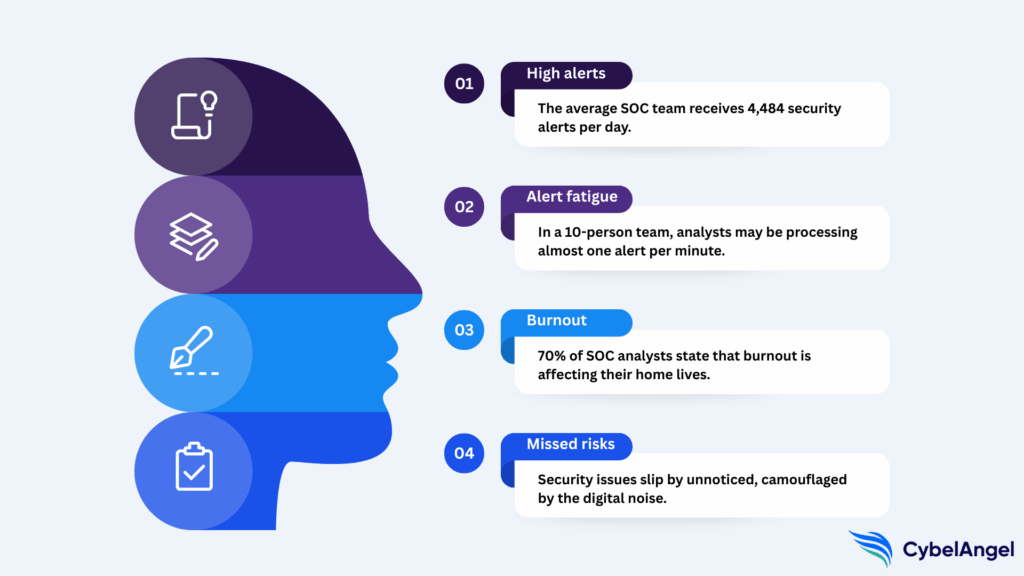

The operational load: How many alerts SOC analysts face daily

SOC teams handle an average of 4,484 security alerts per day, and other sources suggest the figure can reach tens of thousands for some teams. They end up ignoring 67% of these because of false positives and alert fatigue. Meanwhile, IBM reports that 71% of analysts believe their organization has probably already been ‘compromised without their knowledge.’

Alert fatigue sets in when analysts face more alerts than they can realistically review. Signals arrive faster than they can be investigated, and a large share of those alerts lack context or repeat detections the team has already triaged. Over time, the constant volume reduces focus and makes it harder to separate meaningful threats from background noise.

This pressure builds into burnout, which is thought to affect around 70% of SOC analysts. Team members spend entire shifts clearing low-value alerts while important investigative work waits. The mental load grows heavier as the queue refills, and the work becomes a cycle of reaction rather than analysis. It doesn’t help that teams often have to run shifts across a full 24-hour cycle. Fatigue slows decision-making, increases errors, and makes it harder to maintain consistent judgement throughout a shift.

In this state, security risks slip through unnoticed. High-fidelity alerts often resemble the noise until they are enriched or correlated, and a fatigued IT team is more likely to overlook them. When early indicators blend into a crowded queue, attackers gain time in the environment, MTTD increases, and incidents grow more severe before anyone realises what has happened.

How many security alerts does an average SOC analyst review daily? And how much of that is noise?

According to IBM, SOC teams receive 4,484 alerts per day, and 67% of these are ignored, largely because they are noise or false positives. In a team of 10 people, that works out to almost 450 alerts per analyst per day. Over an 8-hour shift, that means processing almost one alert per minute.
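The arithmetic behind that estimate can be checked with a short sketch. The alert volume and the 67% share are the figures quoted above; the team size and shift length are the same illustrative assumptions, and the function name is hypothetical.

```python
# Figures quoted in the article; team size and shift length are illustrative assumptions.
ALERTS_PER_DAY = 4_484
IGNORED_SHARE = 0.67
TEAM_SIZE = 10
SHIFT_MINUTES = 8 * 60


def per_analyst_load(alerts_per_day, team_size, shift_minutes):
    """Return (alerts per analyst per day, alerts per analyst per minute)."""
    per_analyst = alerts_per_day / team_size
    per_minute = per_analyst / shift_minutes
    return per_analyst, per_minute


per_analyst, per_minute = per_analyst_load(ALERTS_PER_DAY, TEAM_SIZE, SHIFT_MINUTES)
ignored_per_analyst = per_analyst * IGNORED_SHARE

print(f"{per_analyst:.1f} alerts per analyst per day")      # 448.4
print(f"{per_minute:.2f} alerts per analyst per minute")    # 0.93
print(f"{ignored_per_analyst:.0f} of those likely ignored")  # 300
```

Changing `TEAM_SIZE` or `SHIFT_MINUTES` shows how quickly the per-analyst load shifts with staffing.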

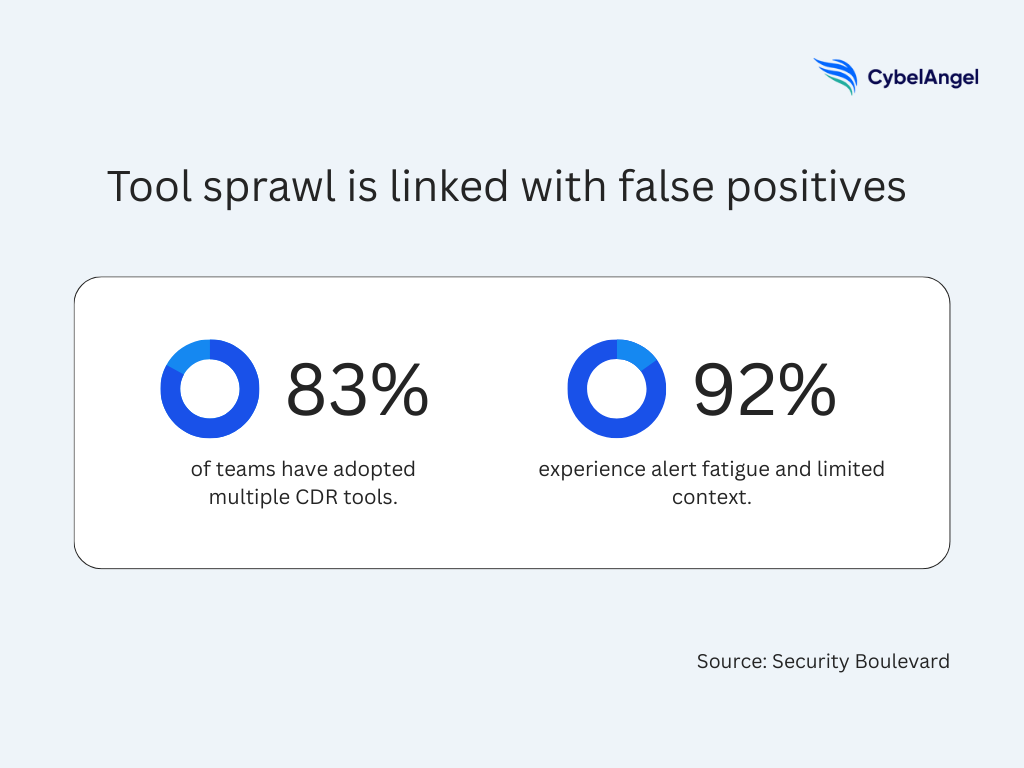

The direct link between tool sprawl and false positives

Tool sprawl increases false positives because every product brings its own detection logic, thresholds, and interpretation of the same underlying activity. And it all contributes to SOC overload.

Here’s how it happens:

- Several tools watch the same telemetry

- Each tool flags the same behavior independently

- Duplicate or conflicting alerts are created

Small integration gaps widen the problem. One event can appear as a cascade of separate detections when tools fail to correlate or enrich the signal before sending it to the SOC queue.
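One way to picture the missing correlation step is a simple grouping pass that collapses alerts about the same host and behavior before they reach the queue. This is a minimal sketch, not a description of any specific product: the `Alert` fields, tool names, and the five-minute window are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    tool: str      # hypothetical product name
    host: str
    behavior: str  # normalized label shared across tools (an assumption)
    minute: int    # event time in minutes since an arbitrary start


def group_duplicates(alerts, window_minutes=5):
    """Group alerts that share a host, a behavior, and a time bucket."""
    groups = defaultdict(list)
    for alert in alerts:
        key = (alert.host, alert.behavior, alert.minute // window_minutes)
        groups[key].append(alert)
    return list(groups.values())


alerts = [
    Alert("edr", "web-01", "credential_dumping", 100),
    Alert("siem", "web-01", "credential_dumping", 101),
    Alert("ndr", "web-01", "credential_dumping", 103),
    Alert("edr", "db-02", "port_scan", 240),
]

cases = group_duplicates(alerts)
for case in cases:
    print(case[0].host, case[0].behavior, sorted(a.tool for a in case))
```

Here four raw alerts become two cases. Fixed time buckets are a deliberate simplification: two alerts that straddle a bucket boundary would be split, so a real pipeline would likely use a sliding window instead.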

Different teams or suppliers also classify behaviours in different ways, so one tool may treat an action as suspicious while another treats it as routine. Analysts then spend time reconciling these verdicts rather than investigating what actually happened.

As the number of tools grows, the total surface area where false positives can originate expands with it.

What is the direct correlation between security tool sprawl and an increase in false positives?

A recent study found that while 83% of security teams have adopted multiple cloud detection and response (CDR) tools, 92% of them also report struggling with alert fatigue and limited context. That combination makes false positives all but inevitable.

Security silos and the failure to correlate external threats

Security silos break the connection between what happens inside the environment and what unfolds outside it.

Internal monitoring tools focus on local signals, but they rarely connect them with external intelligence in a structured or timely way. All too often, the systems that should talk to each other never do.

This separation leaves high-value external signals stranded and widens the attack surface. Here’s an example of how it could unfold:

- An impersonating domain is detected by an external platform

- The intelligence often sits in a separate dashboard with no integration to other existing tools

- Internal detections then fire without the enrichment that would explain their relevance

- Analysts treat them as routine rather than indicators of compromise in their ecosystem

This is how critical correlations never happen. If leaked credentials cannot be linked to a spike in authentication failures, or if an exposed asset cannot be tied to inbound scanning events, then the SOC loses the full picture of the intrusion chain.
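The credential example can be sketched as a small join between an external leak feed and internal authentication logs. The data shapes, account names, and the threshold of three failures are hypothetical and chosen only to illustrate the idea.

```python
from collections import Counter


def correlate_leaks_with_failures(leaked_accounts, failed_logins, threshold=3):
    """Return leaked accounts that also show at least `threshold` failed logins."""
    failures = Counter(event["account"] for event in failed_logins)
    return {
        account: failures[account]
        for account in sorted(leaked_accounts)
        if failures[account] >= threshold
    }


# External intelligence: accounts seen in a credential leak (illustrative data).
leaked_accounts = {"alice@example.com", "bob@example.com"}

# Internal telemetry: failed authentication events (illustrative data).
failed_logins = (
    [{"account": "alice@example.com"}] * 4
    + [{"account": "carol@example.com"}] * 5
    + [{"account": "bob@example.com"}]
)

matches = correlate_leaks_with_failures(leaked_accounts, failed_logins)
print(matches)  # {'alice@example.com': 4}
```

Carol has more failures than Alice, yet only Alice is flagged, because her account also appears in the leak. Neither data source produces that priority on its own.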

Ultimately, data silos block context. And without context, the signals that matter most look no different from the noise.

How do security silos prevent correlation of internal alerts with critical external threats (e.g., a dark web credential leak)?

Security silos keep internal alerts and external intelligence in separate systems, so the signals never meet. A dark web credential leak may sit in one dashboard while authentication anomalies appear in another, with no mechanism to tie them together. Without that correlation, analysts lose the context that would reveal the severity of the threat.

MTTD degradation and alert fatigue

Mean time to detect (MTTD) is the average time between the start of a threat and the moment it is detected. MTTD rises when analysts no longer have the capacity to give each signal the attention it deserves.
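As a rough illustration, MTTD is commonly calculated by averaging the gap between when malicious activity began and when it was detected. The timestamps below are invented for the example, and `mttd_hours` is a hypothetical helper rather than a function from any particular SIEM.

```python
from datetime import datetime
from statistics import mean


def mttd_hours(incidents):
    """Average hours between activity start and detection across incidents."""
    gaps = [
        (detected - started).total_seconds() / 3600
        for started, detected in incidents
    ]
    return mean(gaps)


# (activity started, activity detected) pairs; illustrative values only.
incidents = [
    (datetime(2025, 1, 1, 8, 0), datetime(2025, 1, 1, 20, 0)),  # 12 hours
    (datetime(2025, 1, 5, 0, 0), datetime(2025, 1, 6, 12, 0)),  # 36 hours
    (datetime(2025, 2, 1, 9, 0), datetime(2025, 2, 1, 21, 0)),  # 12 hours
]

print(mttd_hours(incidents))  # 20.0
```

A single slow detection (36 hours here) pulls the mean up noticeably, which is why a handful of missed early signals can degrade the metric for the whole team.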

The more tools a SOC relies on, the longer the investigation path becomes. Every switch between dashboards adds friction, and every duplicated alert forces analysts to re-verify details they have already checked.

Meanwhile, alert fatigue slows reactions. The flood of notifications from multiple tools creates a bottleneck in the triage queue. Analysts move through queues quickly to keep up with volume, which reduces the time spent examining subtle patterns or low-severity anomalies that often mark the beginning of an intrusion. Early indicators blend in, and detection happens later in the attack cycle, when the adversary has already gained ground.

Why is high Mean Time to Detect (MTTD) a direct consequence of alert fatigue?

High MTTD is a direct outcome of alert fatigue because overloaded analysts miss, delay, or down-rank early signals. The extra steps created by tool sprawl then push investigations deeper into the attack timeline.

The economic cost of missed alerts

Missed external alerts carry a disproportionate financial impact. When a high-fidelity signal (such as leaked credentials or an exposed asset) goes unnoticed, the intrusion is usually discovered later in the attack cycle, when the adversary has already escalated access.

Google Cloud Security’s M-Trends 2025 report shows that attackers can remain inside environments for a median of 11 days before detection, giving them time to move laterally, stage data, and prepare for impact.

A missed alert shifts the timeline into the most expensive phases of an incident. At that point, organisations absorb full incident-response hours, operational disruption, and the downstream costs tied to exfiltration, extortion, or ransomware deployment. Legal exposure and reputational harm follow, especially when stolen data appears on criminal marketplaces.

What is the economic cost of a missed, high-fidelity external alert caused by alert fatigue?

The average cost of a data breach in 2025 is an eye-watering $4.4 million, even after a modest year-over-year decline. A missed high-fidelity external alert can push an organisation straight into that loss range, because detection happens late in the attack.

Why automation alone isn’t the answer

Many experts cite automation and AI as the solution.

But automation only works when the signals feeding it are clean and consistent. Otherwise, it just repeats the same mistakes the analysts are already struggling with. The system accelerates the workflow, but it accelerates the noise as well.

Fragmented toolsets make this worse. When automation pulls from disconnected products and uneven datasets, it cannot build a reliable picture of what is actually happening in the environment.

Some teams have used AI to reduce false positives by 54%. AI-assisted correlation eases some of the manual load but, again, it depends on coherent inputs.

You can only automate effectively when the data behind it is trustworthy. And that begins with the quality of the external intelligence feeding the system…

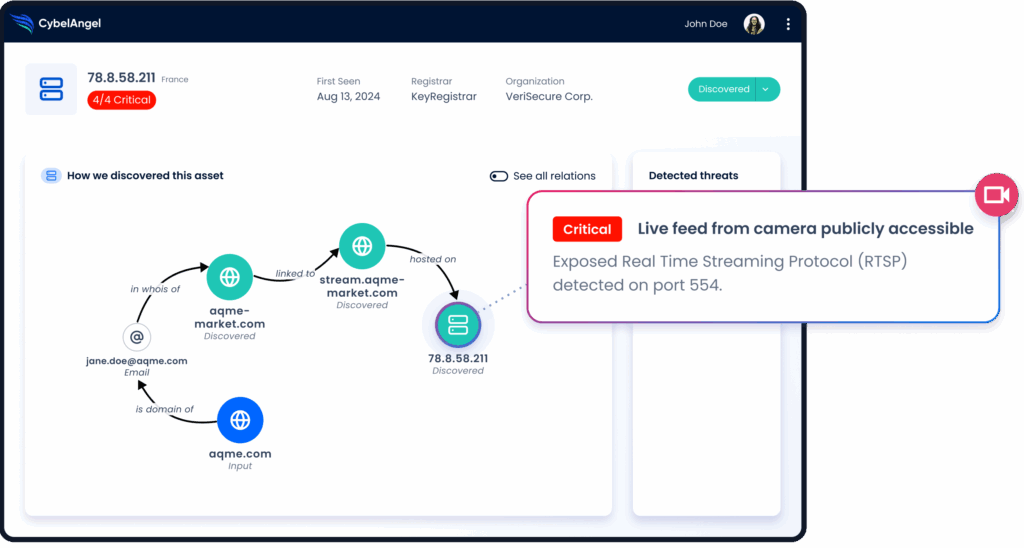

CybelAngel: Cutting through the noise

A unified external visibility platform gives analysts clear, high-value signals instead of raw noise. CybelAngel identifies exposed assets, leaked credentials, and third-party risks, then delivers each finding with the context needed to understand why it matters.

This reduces triage time. The alert arrives already explained, including where it came from, what it affects, and what action is needed, so analysts can move straight to verification and response.

Figure 3: Graphic showing data breach prevention and troubleshooting with CybelAngel. (Source: CybelAngel)

How does a unified external visibility platform (like CybelAngel) provide high-fidelity, contextualised alerts that cut through the noise?

A unified platform like CybelAngel cuts through the noise by delivering complete, contextualised external alerts that link exposure, severity, and remediation in one place, allowing analysts to act quickly without wading through low-value signals.

Use cases where tool consolidation restores SOC performance

Cybersecurity platform consolidation helps analysts connect signals that would otherwise stay isolated.

For example, when leaked credentials detected by external intelligence appear alongside unusual privilege use, the link becomes immediate and actionable. Analysts no longer waste time checking multiple dashboards before treating it as a real threat.

The same applies to exposed assets. If an external scan reveals an unprotected service and the SOC sees inbound exploitation attempts soon after, consolidation pulls those events into a single narrative. The team can respond in real time before the intrusion escalates.

Third-party compromises benefit as well. External alerts about a supplier’s breach can flow directly into internal monitoring, allowing response actions before the threat reaches the organisation’s own environment. Consolidation creates a straight path from signal to action, without waiting for cross-tool verification.

Designing an observability platform for the SOC

An observability model for SOCs follows a simple progression:

- Telemetry

- Enrichment

- Correlation

- Action

Each stage depends on clean inputs and a clear workflow. When too many redundant tools fragment that path, the SOC loses precision.
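The four stages can be sketched as a small chain of functions. This is a toy model under stated assumptions: the event fields, the owner lookup, and the rule that two distinct signals on one host trigger escalation are all hypothetical.

```python
def enrich(event, asset_owners):
    """Enrichment: attach the asset owner, or 'unknown' if none is recorded."""
    return {**event, "owner": asset_owners.get(event["host"], "unknown")}


def correlate(events):
    """Correlation: group enriched events by host."""
    by_host = {}
    for event in events:
        by_host.setdefault(event["host"], []).append(event)
    return by_host


def decide(by_host, min_signals=2):
    """Action: escalate hosts with several distinct signals, monitor the rest."""
    return {
        host: "escalate"
        if len({e["signal"] for e in events}) >= min_signals
        else "monitor"
        for host, events in by_host.items()
    }


# Telemetry: raw events from external and internal sources (illustrative data).
telemetry = [
    {"host": "web-01", "signal": "exposed_service"},
    {"host": "web-01", "signal": "inbound_exploit_attempt"},
    {"host": "hr-laptop-7", "signal": "failed_login"},
]
asset_owners = {"web-01": "platform-team"}

enriched = [enrich(event, asset_owners) for event in telemetry]
actions = decide(correlate(enriched))
print(actions)  # {'web-01': 'escalate', 'hr-laptop-7': 'monitor'}
```

Because each stage consumes the previous stage's output directly, a gap at any point (for example, telemetry that never reaches `enrich`) weakens every decision downstream.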

Fewer tools simplify the workflow and reduce integration challenges. Analysts move through fewer verification steps, pivot less between interfaces, and see correlations form earlier in the investigation. Decision-making becomes faster because the evidence is already aligned rather than scattered across disconnected products.

The result is a platform that supports the way SOCs think: direct, context-rich, and built for rapid assessment.

Wrapping up

So, where do SOCs go next? IBM’s research links large toolsets to slower detection, and M-Trends shows attackers moving quickly across exposed surfaces. Consolidation helps by reducing friction and giving analysts a clearer view of what matters.

SOCs are shifting toward environments where fewer, more reliable tools share context instead of competing for it. This makes investigations faster and streamlines the overall workload.

Ready to reduce alert fatigue and surface the signals that matter? CybelAngel offers unified external visibility that adds context and clarity to your security posture. Book a demo to see how.