AI-generated Fake News Sites: How Artificial Intelligence is Weaponizing SEO for Profit


Artificial intelligence is now being used to generate thousands of fake news websites that look credible and rank highly on Google. This SEO-driven model creates serious risks, including identity theft, misuse of user data, increased potential for spear-phishing and social engineering, and the spread of misleading or false information that manipulates individuals.

Generative artificial intelligence is upending established practices in information technology. The rapid rise and proliferation of new tools and methods has made it possible to semi-automate, or even fully automate, the generation of online content. The speed and multitasking capabilities of these tools significantly increase the potential for harm when malicious actors exploit them.

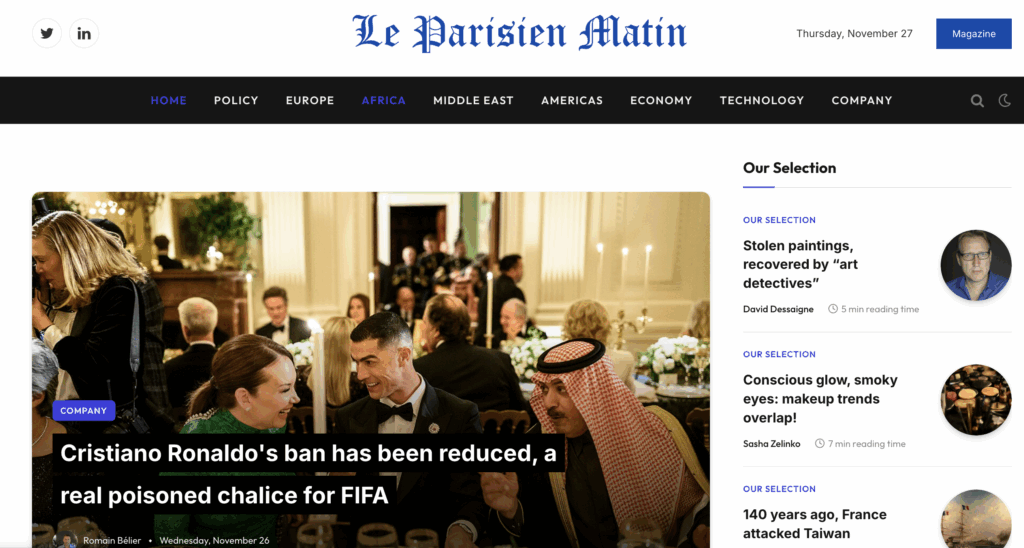

“leparisienmatin.fr,” a website that partially mimics the title of a well-known newspaper, is a striking example that we at CybelAngel have detected.

Largely generated by AI, this website presented, at the time of our investigation, all the characteristics of a standard news platform while concealing objectives that were anything but informational.

Our investigation explains how artificial intelligence, used to mass-produce online content, ultimately serves to manipulate Google’s algorithms and promote third-party companies for nefarious purposes.

This practice reveals the development of a complex SEO strategy based on intricate and organized netlinking.

Beyond violating Google’s policies, this practice causes numerous harmful effects, such as:

- Brand impersonation

- Potential media misinformation

- Identity theft of public figures

- Resale of personal data from internet users and security risks

1. The explosion of AI-generated fake news networks

The problem extends far beyond a single fraudulent website. French investigative journalist Jean-Marc Manach uncovered a sprawling ecosystem of over 4,000 AI-generated news websites operating primarily in French, with at least 100 already appearing in English. What began as 70 suspicious sites in early 2024 has metastasized into thousands, representing what Manach describes as a “Sisyphean task” to track and document.

These sites operate with a simple but effective business model: generate massive volumes of content using AI tools like ChatGPT or similar language models, optimize that content for search engines, and harvest advertising revenue when users click through from Google Discover or search results. Some operators have reportedly become millionaires through this scheme.

The anatomy of an AI content farm

The typical AI-generated news site follows a predictable pattern:

- Content generation: Articles are either plagiarized from legitimate news sources and rewritten by AI, or entirely fabricated by language models. The content often contains telltale signs of AI generation—awkward phrasing, factual inconsistencies, and the distinctive “hallucinations” that AI systems produce when inventing information.

- Visual elements: Images are frequently AI-generated, with obvious artifacts that trained eyes can spot. Stock photos may be used without proper attribution, or synthetic images created by tools like DALL-E or Midjourney accompany fabricated stories.

- Author profiles: Bylines are attributed either to real people whose identities have been stolen or to non-existent journalists with no LinkedIn profile, no publication history, and no digital footprint beyond the fake site itself. Some sites use AI-generated headshots for these phantom authors.

- Domain strategy: Operators often register domains that mimic legitimate news outlets, using slight variations in spelling or adding regional indicators to create an illusion of credibility. The “leparisienmatin.fr” example demonstrates this tactic—borrowing authority from “Le Parisien,” a genuine French newspaper.

How Google Discover became a cash machine

A critical turning point in this phenomenon came when operators discovered that Google Discover, the personalized news feed on Android devices and in Google apps, was particularly vulnerable to AI-generated content. Unlike traditional search, which requires users to actively enter a query, Discover pushes content to users based on their browsing history and interests.

For publishers, appearing in Google Discover can generate thousands of dollars per day in advertising revenue through Google AdSense. The algorithm prioritizes engagement signals like click-through rates, which sensationalized and clickbait headlines excel at generating—regardless of content quality or veracity.

YouTube tutorials emerged in 2022-2024 teaching SEO professionals “how to hack Google Discover” using generative AI. These guides spread rapidly through French-speaking SEO communities, democratizing the techniques and creating an arms race of AI-generated content farms.

The misinformation amplification effect

The consequences extend beyond mere spam. These AI-generated sites have successfully pushed completely false stories into Google’s recommendation systems to drive popularity and engagement. These include:

- Banknotes would cease to exist in France from October 2025

- Grandparents would no longer be able to transfer money to grandchildren

- The French government would seize money from savings accounts to finance the war in Ukraine

- A 25,000-year-old pyramid had been discovered under a mountain

- A giant predator resembling an enormous dodo was found beneath Antarctic ice

More insidiously, some of these fabricated stories have been picked up and amplified by human journalists at other outlets, who failed to verify the information before republishing. This creates a misinformation multiplier effect, where AI-generated falsehoods gain legitimacy through citation by legitimate media.

NewsGuard’s AI Tracking Center has documented hundreds of these “unreliable AI-generated news websites” across multiple languages, demonstrating that this is a global phenomenon affecting information integrity worldwide.

2. The dark art of netlinking and SEO manipulation

At the heart of these AI content farms lies a sophisticated understanding of search engine optimization, specifically the practice of “netlinking”—building networks of hyperlinks to artificially inflate a website’s authority in Google’s ranking algorithm.

Understanding Google’s link-based ranking system

Google’s search algorithm still relies heavily on backlinks as a ranking signal. When one website links to another, it’s treated as a “vote of confidence”—evidence that the linked content has value. Sites with many high-quality backlinks from authoritative domains tend to rank higher in search results.
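To make the "vote of confidence" idea concrete, here is a minimal sketch of the original textbook PageRank computation on a toy graph. Google's current ranking system combines many signals and its internals are not public, so this only illustrates the underlying principle; the function name, the damping factor of 0.85, and all domains are hypothetical. It compares a small organic web with the same web plus five farm sites that cross-link and all point at a paying client.

```python
from __future__ import annotations


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Textbook PageRank computed by power iteration on a small link graph."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump").
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each outgoing link passes an equal slice of this page's rank.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# A small organic web: one news site links to the client and one other site.
organic = {
    "news.example": ["client.example", "other.example"],
    "other.example": ["news.example"],
    "client.example": [],
}

# The same web plus five hypothetical farm sites that link to the client
# and to every other farm site.
farm_sites = [f"farm{i}.example" for i in range(5)]
with_farm = dict(organic)
for site in farm_sites:
    with_farm[site] = ["client.example"] + [s for s in farm_sites if s != site]

before = pagerank(organic)
after = pagerank(with_farm)
print(max(before, key=before.get))  # news.example
print(max(after, key=after.get))    # client.example
```

The client's raw score actually drops, because the same total is now shared among more pages. What matters for the scheme is relative position: with the farm in place, the client outranks the genuine news site.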

This fundamental mechanism has created a lucrative black market for link manipulation. According to Google’s spam policies, “link spam” includes:

- Buying or selling links for ranking purposes

- Excessive link exchanges (“Link to me and I’ll link to you”)

- Using automated programs to create links

- Large-scale guest posting with keyword-rich anchor text

- Creating low-quality directory or bookmark site links

All of these practices violate Google’s spam policies, yet they persist because they work, at least temporarily, until detection systems catch up.

The netlinking scheme behind AI news sites

The AI-generated news sites employ several netlinking strategies simultaneously:

- Internal link networks: Operators create multiple sites that cross-link to each other, building a web of artificial authority. A single operator might control dozens of domains, all referencing each other to create the illusion of independent editorial endorsement.

- Third-party promotion: The primary business model involves selling backlinks to legitimate companies. A business pays the AI site operator to publish an article mentioning their brand with optimized anchor text linking to their website. Because the AI-generated site may have accumulated some authority through volume and Google Discover appearances, these backlinks pass valuable “link equity” to the paying client.

- Parasite SEO: Some operators practice “site reputation abuse”—publishing third-party commercial content on domains that have established ranking signals. For example, an AI-generated site might start as general news content to build authority, then gradually introduce paid promotional content for casinos, payday loans, or other high-margin products.

- Expired domain hijacking: Operators purchase expired domain names that previously belonged to legitimate organizations—government agencies, non-profits, educational institutions—and repurpose them to host commercial or affiliate content. The domain retains its historical authority in Google’s system, allowing the new content to rank higher than it otherwise would.
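One simple signal of the internal link networks described above is a group of domains that all link to each other. The following is a hypothetical sketch, not a method used by Google or described in our investigation: it treats a pair of domains as connected only when links run in both directions, then merges connected pairs into candidate "rings" with a union-find structure. Real networks often avoid reciprocal links precisely to escape this kind of check, so a match is a lead for manual review, not proof.

```python
from __future__ import annotations

from collections import defaultdict


def find_link_rings(links: dict[str, set[str]], min_size: int = 3) -> list[set[str]]:
    """Group domains connected by reciprocal links into candidate link rings."""
    parent: dict[str, str] = {}

    def find(domain: str) -> str:
        parent.setdefault(domain, domain)
        while parent[domain] != domain:
            parent[domain] = parent[parent[domain]]  # path halving
            domain = parent[domain]
        return domain

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for source, targets in links.items():
        for target in targets:
            # Only A -> B together with B -> A counts as an edge here.
            if target != source and source in links.get(target, set()):
                union(source, target)

    clusters: dict[str, set[str]] = defaultdict(set)
    for domain in list(parent):
        clusters[find(domain)].add(domain)
    return [cluster for cluster in clusters.values() if len(cluster) >= min_size]


# Hypothetical crawl data: which domains each domain links to.
links = {
    "site-a.example": {"site-b.example", "site-c.example", "client.example"},
    "site-b.example": {"site-a.example", "site-c.example", "client.example"},
    "site-c.example": {"site-a.example", "site-b.example"},
    "blog.example": {"client.example"},
}
print(find_link_rings(links))  # one ring: site-a, site-b and site-c
```

The client and the independent blog are not flagged, because none of their links are returned; `min_size` keeps ordinary two-site partnerships out of the results.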

These tactics mirror the psychological manipulation techniques that cybercriminals use in social engineering attacks. Both rely on exploiting trust mechanisms—whether human psychology or algorithmic behavior—to achieve malicious objectives.

Why does Google struggle to combat this?

Despite having clear policies against these practices, Google faces significant enforcement challenges. With more than 4,000 sites identified in French alone, and likely tens of thousands globally, manual review is impossible. Automated detection systems must identify patterns, but sophisticated operators constantly evolve their techniques to evade detection. And as language models improve, AI-generated content becomes harder to distinguish from human-written text: the obvious errors and awkward phrasing that once signaled AI generation are disappearing.

Not all AI-assisted content is spam, either. Many legitimate publishers use AI tools for research, drafting, or editing, so Google must differentiate between helpful AI assistance and manipulative AI generation, a nuanced distinction that algorithms struggle with.

The economic incentives driving these operations are also enormous. With advertising revenue reaching thousands of dollars per day for successful sites, operators have strong motivation to continue despite the risks. Even when individual sites are penalized, operators simply launch new domains and repeat the process.

3. The broader consequences: Beyond SEO gaming

While the immediate goal of these AI-generated sites is financial gain through advertising and backlink sales, the collateral damage extends into several concerning areas.

Brand impersonation and reputation damage

When a site like “leparisienmatin.fr” mimics “Le Parisien,” it commits a form of brand impersonation that can damage the legitimate publication’s reputation. Readers who encounter false information on the fake site may associate that misinformation with the real brand, eroding trust in genuine journalism.

This tactic mirrors techniques used in phishing and social engineering attacks, where threat actors leverage brand recognition to deceive victims. The psychological principle is identical: people trust familiar names and are less likely to scrutinize content that appears to come from a known source.

Just as modern computer viruses exploit technical vulnerabilities in systems, these fake news sites exploit cognitive vulnerabilities in how humans process information and trust brands.

Identity theft of public figures

Many AI-generated news sites fabricate quotes from real politicians, business leaders, or celebrities to add credibility to their content. These phantom endorsements can mislead readers and potentially harm the reputation of the individuals whose identities are being exploited.

In some cases, AI-generated images of public figures accompany these fake articles, creating visual “evidence” of statements or events that never occurred. As deepfake technology improves, this problem will intensify, making it increasingly difficult for readers to distinguish authentic content from fabrication.

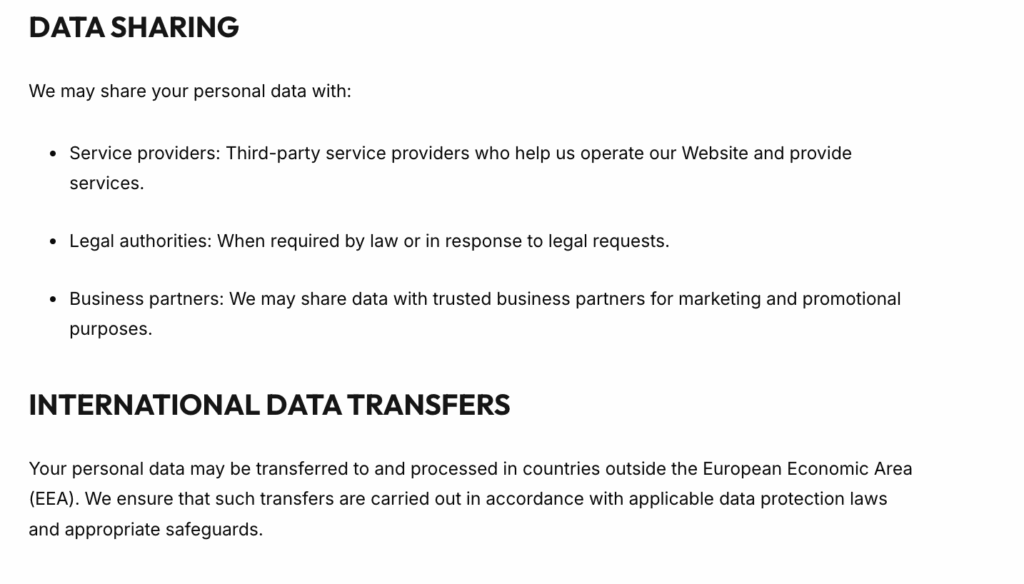

Data harvesting and privacy risks

Behind the advertising revenue model lies another potential threat: data collection. Many of these AI-generated sites employ aggressive tracking technologies to harvest user data—browsing behavior, device information, location data, and more.

This data can be:

- Sold to data brokers: User profiles are valuable commodities in the digital advertising ecosystem

- Used for targeted phishing: Understanding a user’s interests and browsing patterns helps craft more convincing phishing attempts

- Exploited for fraud: Financial information or login credentials can be captured through malicious scripts embedded in the sites

Some sites may also serve malware or unwanted software through deceptive download buttons or fake security warnings—tactics that require comprehensive protection strategies to defend against effectively.

4. Detection and defense: Protecting your organization

For businesses and individuals concerned about these threats, several defensive measures can help.

Identifying AI-generated fake news sites

Jean-Marc Manach and his collaborators developed a browser extension that flags sites in their database of AI-generated content. While this tool covers thousands of sites, new ones emerge constantly, so vigilance remains essential.

Key indicators to watch for include:

- Non-existent authors: Search for the byline on LinkedIn, Twitter, or other professional networks. Phantom journalists leave no digital footprint.

- Generic or AI-generated images: Look for visual artifacts common in AI-generated imagery—unusual textures, distorted faces, impossible lighting.

- Domain mimicry: Be suspicious of sites with names that closely resemble legitimate publications but with slight variations.

- Sensationalized headlines: AI content farms rely on clickbait to drive traffic. Extraordinary claims without proper sourcing are red flags.

- Lack of transparency: Legitimate news organizations provide clear information about ownership, editorial standards, and contact methods. Fake sites typically omit these details.
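The domain mimicry check can be partly automated. Below is a minimal heuristic sketch using Python's standard `difflib.SequenceMatcher`: a domain is flagged when its main label contains a known brand label (as "leparisienmatin" contains "leparisien") or is spelled very close to it. The function names, the 0.8 similarity threshold, and the typo domain "lemonnde.fr" are hypothetical choices for illustration, and the label extraction is deliberately crude; production code would rely on the Public Suffix List to handle domains such as "example.co.uk".

```python
from difflib import SequenceMatcher


def main_label(domain: str) -> str:
    """Crude label extraction: the part just left of the last dot.

    Real code should use the Public Suffix List so 'example.co.uk' is handled.
    """
    parts = domain.lower().rstrip(".").split(".")
    return parts[-2] if len(parts) >= 2 else parts[0]


def looks_like(domain: str, legit_domains: list[str], threshold: float = 0.8) -> list[str]:
    """Return the legitimate domains that `domain` appears to imitate."""
    domain = domain.lower().rstrip(".")
    label = main_label(domain)
    hits = []
    for legit in legit_domains:
        legit = legit.lower()
        if domain == legit or domain.endswith("." + legit):
            continue  # the genuine site or one of its own subdomains
        brand = main_label(legit)
        contains_brand = brand in label  # 'leparisien' inside 'leparisienmatin'
        close_spelling = SequenceMatcher(None, label, brand).ratio() >= threshold
        if contains_brand or close_spelling:
            hits.append(legit)
    return hits


LEGIT = ["leparisien.fr", "lemonde.fr"]
print(looks_like("leparisienmatin.fr", LEGIT))  # ['leparisien.fr']
print(looks_like("lemonnde.fr", LEGIT))         # ['lemonde.fr']
print(looks_like("www.leparisien.fr", LEGIT))   # []
```

Short brand names will produce false positives with the "contains" rule, so hits are best treated as candidates for the manual checks listed above.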

Protecting your brand from netlinking schemes

If your organization discovers that AI-generated sites are linking to it as part of a paid netlinking scheme, whether you participated knowingly or were targeted without your consent, you should take action.

Here is what we recommend you do.

Start by auditing your backlink profile using tools like Google Search Console, Ahrefs, or SEMrush to identify suspicious patterns. Use Google’s Disavow Tool to tell Google to ignore harmful backlinks that could trigger penalties. Then contact site operators directly to request link removal and document all attempts.
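The disavow step expects a plain-text file with one entry per line: a full URL to ignore a single page, `domain:` followed by a domain to ignore every link from that domain, and lines starting with `#` treated as comments. The sketch below turns the domains and URLs flagged during your audit into that format. The function name and all input domains are hypothetical, and Google recommends using the disavow tool with care, typically only for clearly artificial links you cannot get removed.

```python
from urllib.parse import urlsplit


def build_disavow(domains: list[str], urls: list[str], comment: str = "") -> str:
    """Build the text of a disavow file: one entry per line, '#' lines are comments."""
    domain_set = {d.strip().lower() for d in domains if d.strip()}
    lines = [f"# {comment}"] if comment else []
    # 'domain:' entries ask Google to ignore every link from that domain.
    lines += [f"domain:{d}" for d in sorted(domain_set)]
    for url in sorted({u.strip() for u in urls if u.strip()}):
        host = (urlsplit(url).hostname or "").lower()
        if host not in domain_set:  # skip URLs already covered by a domain: entry
            lines.append(url)
    return "\n".join(lines) + "\n"


text = build_disavow(
    domains=["spam-news-example.fr", "Ring-Site-Example.com"],
    urls=[
        "https://spam-news-example.fr/article-123",
        "https://blog.example.org/paid-post",
    ],
    comment="Flagged during backlink audit",
)
print(text, end="")
# Save as a UTF-8 .txt file before uploading it through the disavow tool, e.g.:
# pathlib.Path("disavow.txt").write_text(text, encoding="utf-8")
```

Sorting and deduplicating the entries keeps the file easy to diff between audits, which helps when documenting removal attempts over time.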

You should also set up Google Alerts for your brand name combined with terms like “review,” “scam,” or “complaint” to catch impersonation attempts early. In addition, consider brand monitoring services, such as the ones we offer at CybelAngel, that alert you when similar domains are registered or your brand appears on suspicious sites.
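Monitoring for "similar domains" starts with knowing which names to watch. The sketch below generates a few common lookalike patterns for a brand domain: dropped, doubled, or swapped letters, appended words such as "matin" (the pattern behind "leparisienmatin.fr"), and the same name under other TLDs. The function, the suffix words, and the TLD list are hypothetical choices; open-source tools such as dnstwist cover many more permutation types. Checking which candidates are actually registered (through WHOIS, DNS, or certificate transparency feeds) is outside this sketch.

```python
from __future__ import annotations


def lookalike_candidates(domain: str,
                         suffixes: tuple[str, ...] = ("matin", "info", "actu", "news"),
                         tlds: tuple[str, ...] = ("fr", "com", "net", "info")) -> set[str]:
    """Generate simple lookalike domains for a 'name.tld' brand domain."""
    label, _, tld = domain.lower().partition(".")  # assumes a 'name.tld' input
    variants = set()
    for i in range(len(label)):
        variants.add(label[:i] + label[i + 1:])           # omission: leparisen
        variants.add(label[:i] + label[i] + label[i:])    # doubled letter: lepparisien
        if i < len(label) - 1:                            # adjacent swap: lepraisien
            variants.add(label[:i] + label[i + 1] + label[i] + label[i + 2:])
    for word in suffixes:
        variants.add(label + word)         # appended word: leparisienmatin
        variants.add(label + "-" + word)   # hyphenated: leparisien-matin
    variants.discard(label)  # swapping two identical letters gives the original back
    candidates = {f"{v}.{t}" for v in variants if v for t in tlds}
    candidates |= {f"{label}.{t}" for t in tlds if t != tld}  # same name, other TLD
    return candidates


candidates = lookalike_candidates("leparisien.fr")
print("leparisienmatin.fr" in candidates)  # True
print(len(candidates))
```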

Cybersecurity best practices to keep in mind

The same principles that protect against malware and phishing apply to AI-generated content threats. Much as stealth malware such as Pikabot operates undetected, these fake news sites blend into the legitimate information landscape.

- User training: Educate employees about fake news sites and how to verify information sources before sharing or acting on content.

- Email filtering: Implement robust spam filters that can detect links to known fake news domains.

- Endpoint protection: Ensure all devices have updated antivirus software to block malware that may be distributed through malicious sites.

- Network monitoring: Track outbound connections to identify employees accessing suspicious domains.

- Incident response planning: Develop protocols for responding when your brand is impersonated or your organization is targeted by misinformation campaigns.
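Both email filtering and network monitoring come down to matching URLs or hostnames against a list of known fake news domains. The sketch below shows one way to do that matching correctly: it checks the host and each of its parent domains, so "www.spam-news-example.fr" is caught while an unrelated "notspam-news-example.fr" is not, which a naive "ends with" string test would get wrong. Where the blocklist comes from (a threat-intelligence feed, an exported database, or your own investigations) is left open, and all domains and function names here are hypothetical.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit

URL_PATTERN = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def is_blocked(host: str, blocklist: set[str]) -> bool:
    """True if the host or any parent domain of it is on the blocklist."""
    parts = host.lower().rstrip(".").split(".")
    # 'www.spam.fr' is checked as 'www.spam.fr', 'spam.fr' and 'fr'.
    return any(".".join(parts[i:]) in blocklist for i in range(len(parts)))


def flag_links(text: str, blocklist: set[str]) -> list[str]:
    """Return the URLs in `text` that point at a blocklisted domain."""
    flagged = []
    for url in URL_PATTERN.findall(text):
        try:
            host = urlsplit(url).hostname
        except ValueError:  # malformed URL, e.g. a broken IPv6 literal
            continue
        if host and is_blocked(host, blocklist):
            flagged.append(url)
    return flagged


BLOCKLIST = {"spam-news-example.fr", "ring-site-example.com"}
body = """Read this: https://www.spam-news-example.fr/banknotes-2025
Official source: https://example.org/report
Different site: https://notspam-news-example.fr/x"""
print(flag_links(body, BLOCKLIST))
# ['https://www.spam-news-example.fr/banknotes-2025']
```

The same `is_blocked` check can be applied to hostnames taken from proxy or DNS logs when tracking outbound connections.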

Wrapping up

The emergence of thousands of AI-generated fake news sites represents a new frontier in online manipulation. By combining the content generation capabilities of large language models with sophisticated SEO tactics like netlinking schemes, operators have created a profitable but deeply harmful business model.

The consequences extend beyond simple spam. These sites erode information integrity, enable brand impersonation, facilitate data harvesting, and undermine public trust in digital media. As AI technology continues to advance, the challenge will only intensify.

CybelAngel provides comprehensive external attack surface monitoring that detects brand impersonation, fake domains, and data exposure across the open, deep, and dark web—helping organizations identify and respond to AI-generated threats before they cause damage.