Cybersecurity Without Machine Learning? Think Again.

Table of contents

When it comes to sensitive data leak, time is of the essence. It doesn’t take long for a leak to turn into a data breach. A few weeks ago, Comparitech’s security research team set up a honeypot simulating a database on an ElasticSearch instance, and put fake user data inside of it. The first attack came less than 9 hours after deployment. (Source: Unsecured databases attacked 18 times per day by hackers, Comparitech) In order to beat attackers, you can either compete on equal grounds and use an internet-of-things search engine like Shodan.io or BinaryEdge, via a combination of random manual searches and Python scripts. Or you use Machine Learning. According to Ponemon, Machine Learning’s greatest benefit is the increase in the speed of analyzing threats. Because it reduces the time to respond to cyber exploits, organizations can potentially save an average of more than $2.5 million in operating costs. (Source: The Value of Artificial Intelligence in Cybersecurity, Ponemon/IBM Security)

Benefits of Machine Learning Models

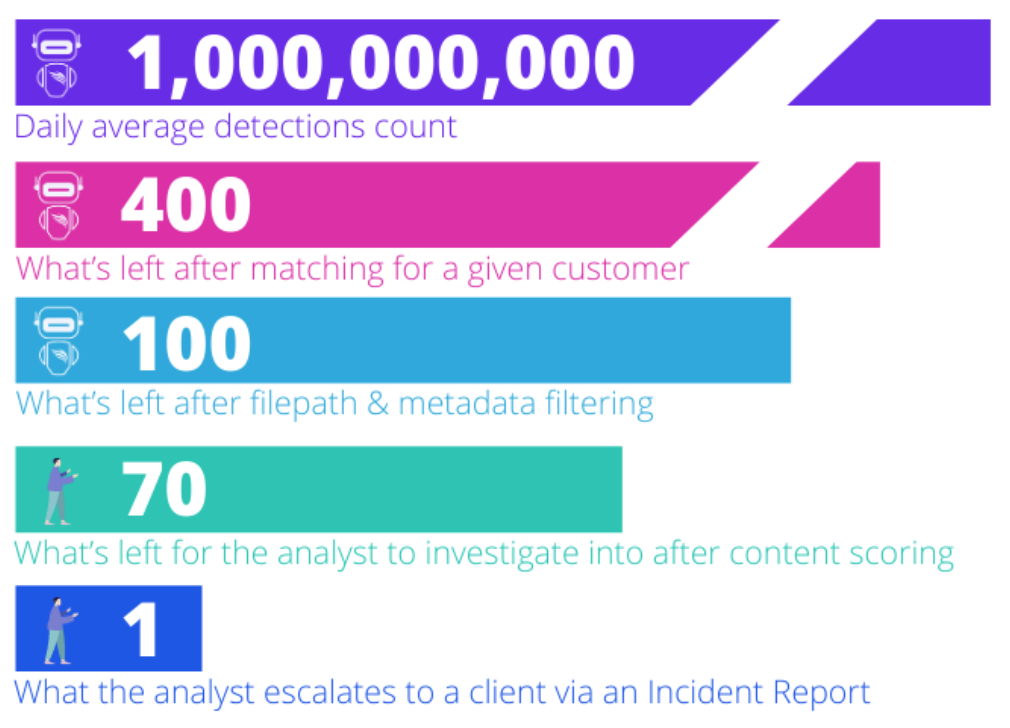

When every second counts, Machine Learning models give you a headstart in a critical race against malicious actors. That’s why our Data Science team creates predictive models that speed up the first alert triage process. In the blink of an eye, billions of detections are automatically discarded before they can reach our cyber analyst’s investigation platform. Here’s how Machine Learning comes into play.

Building Machine Learning Models for Data Leak Detection

Through iterative optimization, Data Scientists build mathematical functions that will allow algorithms to behave like a cyber-analyst would and take the same decision as the human when presented with the same issue. Building a Machine Learning model start with collecting data about :

- The information the model will have to understand (aka the input)

- The decision the model will have to predict (aka the output)

- The correct decision a human would have made (aka the target)

The more data you have, the most accurate the Machine Learning model will be. Once data is collected, it is separated between a training set, and a testing set. Data from the training set are used to create a model where the algorithm makes the same decision as our analyst. During the process, you can use Feature Engineering to identify common characteristics between discarded detections. It increases the predictive power of machine learning algorithms. The model has then to be evaluated on the testing set. This evaluation is done in the most thorough fashion possible to avoid bad surprises when it comes to the generalization to the production. Data Scientists iteratively transform the model until it performs well against the training and testing data. At the end of the process, the algorithm is able to detect real data leaks amongst the billions of data shared every day on the internet. It’s like automatically finding needles in a haystack.

Machine Learning, the CybelAngel’s way

When our scanning tools detect a publicly-accessible internet-connected device matching with a customer’s keyword, they create what we call a detection. Most of the time, a detection is a true negative: for example, the asset doesn’t concern our client. We use all the detections, even the true negatives, to create and train our Machine Learning models. Over time, our unique historical dataset has reached 5,000 billions records. Learn more about our Content Scoring Machine Learning model The larger the dataset, the more accurate the predictions, and the more robust the model. The unique size of our dataset also allows us to be versatile: we can customize sub-datasets for specific training sequences, based on use cases like the origin of the leak, and build very precise models. Training data is representative of all our clients, use cases and alert types found in real life, so that the algorithm will be ready to score when such examples appear.  Every day, only 70 detections out of a billion are pushed to our Analyst team for further investigation. Most detections related to a single customer are discarded as true negatives in milliseconds. The model also ensures true positives don’t get discarded by mistake. We also make sure to keep retraining Machine Learning models. Machine Learning is only as powerful as its predictive effectiveness, both at a certain time and in the long run. Since there’s no “one-size-fits-all” in algorithms, data scientists must adapt models to the specifics of each new customer and each new use case. As CybelAngel expand scanning and detection capacities into new sources, our Machine Learning models must be retrained to reflect:

Every day, only 70 detections out of a billion are pushed to our Analyst team for further investigation. Most detections related to a single customer are discarded as true negatives in milliseconds. The model also ensures true positives don’t get discarded by mistake. We also make sure to keep retraining Machine Learning models. Machine Learning is only as powerful as its predictive effectiveness, both at a certain time and in the long run. Since there’s no “one-size-fits-all” in algorithms, data scientists must adapt models to the specifics of each new customer and each new use case. As CybelAngel expand scanning and detection capacities into new sources, our Machine Learning models must be retrained to reflect:

- The evolution of the alert feed with the nature of the most recent files detected (size, paths, extensions, content);

- The synergy between the different machine learning models in the pipeline.

Cyber security without Machine Learning is simply not something you can envision today. That’s how you achieve both exhaustivity and actionability.