AI-Powered Phishing is on the Rise [What to Do?]

Are you secure against AI-driven phishing in 2025?

Phishing attacks increased by 1,000% between 2022 and 2024, with the majority targeting user credentials.

Malicious actors can use AI platforms like ChatGPT or DeepSeek to write more convincing phishing emails and even set up fake website domains that impersonate legitimate sites.

In 2024, threat actors even impersonated Microsoft to steal user credentials.

To make sure you and your team are protected against AI-powered phishing, let’s get into the current threat landscape and our best tips to secure sensitive information.

How phishing attacks are evolving in 2025

Phishing is a type of cybercrime that exploits a victim’s perception of legitimacy.

Classed by cybersecurity professionals as a form of “social engineering,” phishing attacks have traditionally appeared as legitimate emails from a colleague or trusted company. Once the hackers gain the victim’s trust, they pressure the individual to hand over personal data or information about the company that can be used to extort funds.

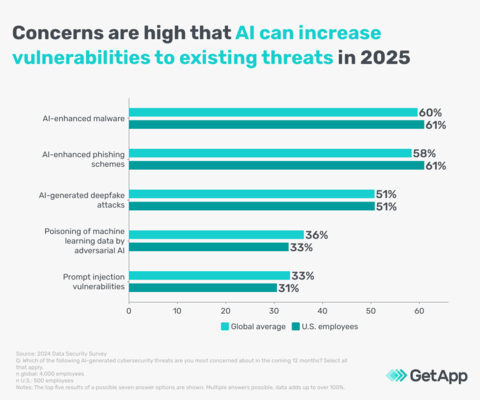

The evolving threat landscape in 2025 has seen phishing become intertwined with machine learning and large language models, which help hackers quickly and easily create AI-generated phishing emails, phone calls, and video calls.

75% of cyberattacks began with a phishing email in 2024, showing that a suspicious email is still a hacker’s preferred method of infiltrating an organization. With AI, attackers are becoming more sophisticated in their efforts to spread ransomware and malware and to compromise email security.

AI is making phishing attacks more convincing

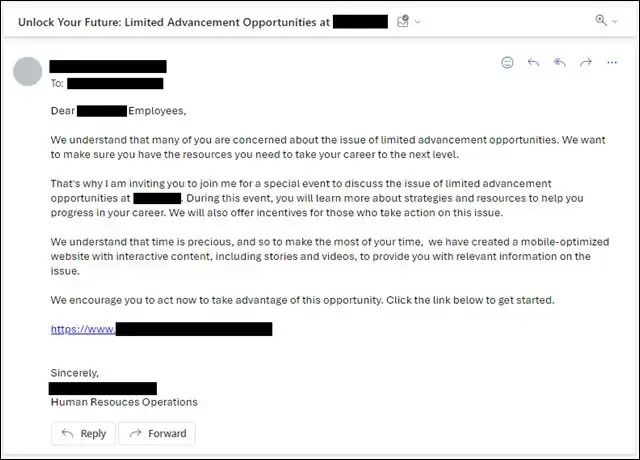

Gone are the days when you could identify a phishing email by spelling mistakes or incorrect grammar. Scammers are using generative AI to orchestrate convincing spear-phishing attacks, usually with only a few pieces of personal information found online.

AI tools like ChatGPT are used to zero in on the main concerns of employees and turn those pain points into a convincing phishing email, free of grammatical mistakes. Because these messages read so convincingly, recipients are more likely to click on a malicious link.

One cybersecurity researcher found that it only took five prompts to instruct ChatGPT to generate phishing emails for specific industry sectors.

In 2024, a multinational firm fell victim to a deepfake scam that cost the business $25 million. An employee was invited to a conference call with other senior staff members. On the call, someone who appeared to be the CFO authorized the payment of $25 million for what the employee thought was a legitimate business reason.

It was later discovered that everyone else on the call was a deepfake. The scammers had scraped publicly available data from social media platforms like LinkedIn and fed it into AI technology to create matching deepfake video and audio. To the employee, the scammers looked and sounded identical to his real-life colleagues.

Even cybersecurity professionals would have a hard time telling vishing and phishing scams apart from legitimate communication. According to Reuters, 97% of organizations are having difficulty verifying identity.

In this evolving cyber threat landscape, AI has created a significant hurdle for businesses. It’s important to understand how AI is used in phishing attacks so companies can find new ways to counter social engineering.

The AI tools helping cyber criminals

The same AI tools that legitimate businesses use to help employees are being used by cybercriminals to defraud them.

Here are some key tools that scammers have already used in phishing attacks:

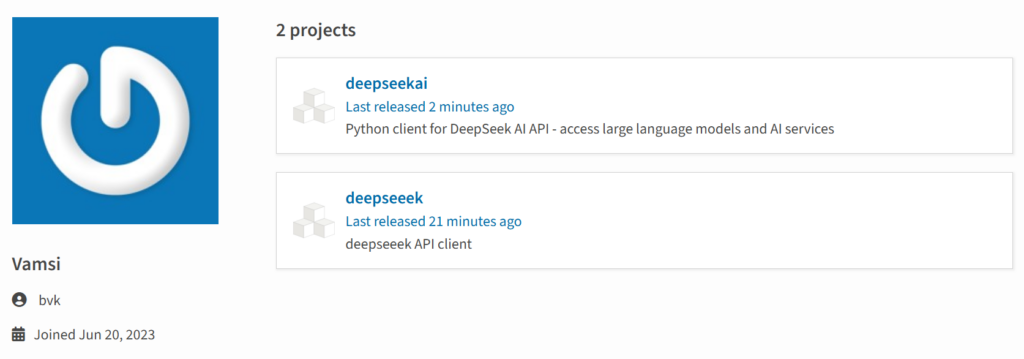

DeepSeek: DeepSeek, an open AI-powered chatbot from China, has been co-opted by scammers to create phishing emails, turn text into audio and video for vishing attacks, and even create fake domains to gain credentials.

ChatGPT and other generative AI platforms: Generative AI tools help threat actors easily and quickly churn out emails, code, or other materials to deceive users and gain entry to systems or credentials. AI can also be used to automate attacks, adapt phishing tactics in real time, and conduct reconnaissance on targets.

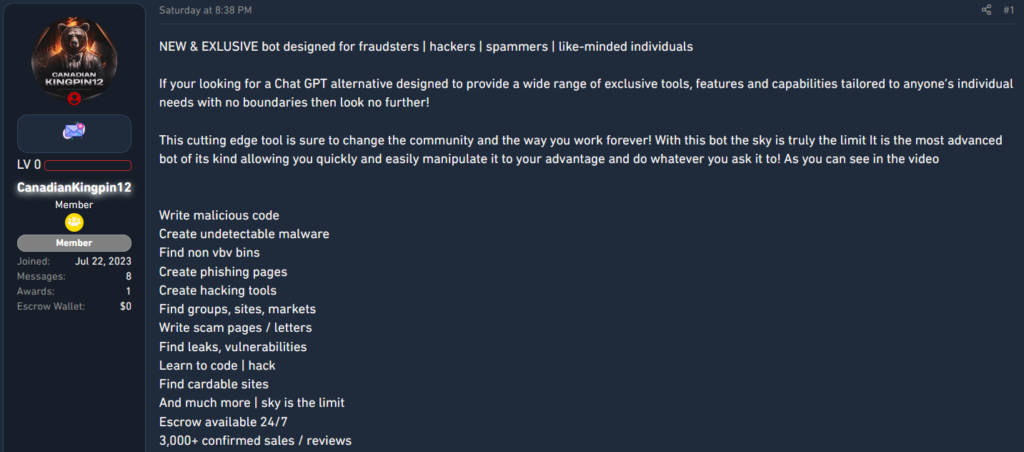

Malicious LLMs such as WormGPT, FraudGPT, Fox8, DarkBERT, and others: Threat actors have created malicious large language models (LLMs) to help them create malware, malicious code, and other illegal software.

AI-powered voice cloning and phone call spoofing apps: Hackers are using voice cloning tools found online to deceive victims. Scammers scrape publicly available data about executives from social media to impersonate them on phone calls. Spoofing apps help hackers appear to be calling from the phone number of whoever they are impersonating.

When DeepSeek was used to harvest credentials…

This year, threat actors set up lookalike DeepSeek sites to trick unsuspecting users into handing over sensitive information like login credentials.

CybelAngel’s requests for takedowns of fake domains surged by 116% in 2024 compared to the previous year. Fake domains created by AI technology are becoming a real problem.
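As a rough illustration of how lookalike domains can be flagged, the sketch below compares an observed domain against a list of known-good ones using string similarity from Python's standard library. The domain list, the digit-for-letter table, and the 0.85 threshold are all assumptions made for this example, not values from any CybelAngel tooling.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical list of genuine domains to protect (assumption for this example).
LEGITIMATE_DOMAINS = ["deepseek.com", "microsoft.com"]

# A few digit-for-letter swaps often seen in fake domains (illustrative, not exhaustive).
DIGIT_SWAPS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def lookalike_of(domain: str, threshold: float = 0.85) -> Optional[str]:
    """Return the genuine domain that `domain` appears to imitate, or None."""
    raw = domain.strip().lower()
    if raw in LEGITIMATE_DOMAINS:
        return None  # an exact match is the real site, not a lookalike
    candidate = raw.translate(DIGIT_SWAPS)
    for legit in LEGITIMATE_DOMAINS:
        # ratio() is 1.0 for identical strings and falls toward 0.0 as they diverge.
        if SequenceMatcher(None, candidate, legit).ratio() >= threshold:
            return legit
    return None


if __name__ == "__main__":
    for observed in ["deepseek.com", "deepseeek.com", "d33pseek.com", "example.org"]:
        print(observed, "->", lookalike_of(observed))
```

A check like this only catches near-miss spellings. Domains that add extra words around a brand name, or that use non-Latin characters, would need additional rules.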

In one case, threat researchers came across malicious Python packages from a fake DeepSeek application. The cybersecurity professionals located the packages within minutes and removed them within an hour.

The packages, however, were still downloaded 200+ times before they were removed. The analysis revealed that the fake DeepSeek packages hid malicious functions designed to collect user and system data.

The malware was designed to send the stolen data to a command and control server through an integration platform. The attack was aimed at developers and those with cybersecurity knowledge, capitalizing on the hype of DeepSeek.
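One way a development team might reduce exposure to packages like these is to check dependency lists against an allowlist of reviewed packages before anything is installed. The following is a minimal sketch under that assumption. The allowlist contents and the package name `deepseek-helper` are hypothetical and used only for illustration.

```python
import re
from typing import List

# Hypothetical allowlist of packages the team has reviewed (assumption for this example).
APPROVED_PACKAGES = {"requests", "numpy", "python-dateutil"}


def canonical_name(name: str) -> str:
    """Normalize a package name following the rule described in PEP 503."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unapproved_requirements(requirements_text: str) -> List[str]:
    """Return the requirement names that are not on the allowlist."""
    flagged = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):  # skip blanks and pip options such as -r
            continue
        # The name is the leading run of characters before any version specifier or extra.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match and canonical_name(match.group()) not in APPROVED_PACKAGES:
            flagged.append(match.group())
    return flagged


if __name__ == "__main__":
    sample = "requests==2.31.0\nNumPy>=1.26\npython_dateutil\ndeepseek-helper  # unreviewed\n"
    print(unapproved_requirements(sample))
```

An allowlist is deliberately conservative: anything new is flagged for review, including legitimate packages, which puts a human in the loop before a hyped-up package name reaches a developer machine.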

Phone spoofing was used to impersonate an executive…

Artificial intelligence-based software was used to impersonate an executive at a multinational company, helping hackers get away with hundreds of thousands of dollars.

The employee received a phone call from someone he believed was his boss at the German-based parent company. He was asked to wire $243,000 into the bank account of a Hungarian supplier.

The AI technology was able to imitate the executive’s slight German accent and even the melody of his voice.

Phone call spoofing and AI voice cloning gave the employee little reason to suspect a phishing attack.

Malicious GenAI is being used to orchestrate cyberattacks…

On the dark web, hackers have created their own generative AI platforms. FraudGPT was first advertised in 2023 to help scammers launch phishing campaigns.

With an interface similar to ChatGPT, FraudGPT doesn’t have any guardrails to stop it from complying with requests like writing malicious code or creating phishing emails that appear to be from a reputable company.

WormGPT has likewise been used to automate personalized emails for phishing attacks and create malware and malicious code. A cybersecurity team tested WormGPT and found it could generate Python scripts capable of credential harvesting.

Hackers rely on social engineering for their attacks

If you received a call from your manager that looked and sounded real, how would you be able to tell it apart from a fake?

Threat actors rely on their targets trusting the phishing attempt, so they make it appear as normal as possible. Recognizing coercive cues during encounters can reveal the scammers’ true intentions.

How social engineering is used in attacks:

Authority bias: Threat actors impersonate figures of authority, such as executives or IT managers, to trick victims into handing over sensitive information.

Reciprocity bias: Social engineers prey on our human tendency to help others. In exchange for information, hackers may offer rewards or other support.

Social proof: Threat actors exploit the tendency to follow others’ actions by providing “evidence” that other colleagues have already approved certain actions.

Urgency bias: Hackers put time pressure on victims to make them act in the moment. They may ask to transfer funds quickly or provide sensitive data without the regular approval loops.

Optimism bias: Cybercriminals take advantage of people’s tendency to overestimate positive outcomes and underestimate risks. Examples include fake job ads or insider information scams.
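The cues above can also be turned into a simple screening aid. The sketch below scans a message for phrases associated with several of these biases and reports which ones appear. The phrase lists are assumptions chosen for illustration, and the `bypass_process` category is an added label for requests to skip the regular approval loops. A keyword check like this is a prompt for human judgment, not a detector.

```python
import re
from typing import List

# Illustrative phrase lists (assumptions for this example); real use would need tuning.
CUE_PATTERNS = {
    "authority": r"\b(ceo|cfo|director|it (?:department|support)|on behalf of)\b",
    "urgency": r"\b(urgent|immediately|right away|within the hour|asap)\b",
    "social_proof": r"\b(already approved|everyone else has|as agreed with)\b",
    "reciprocity": r"\b(in return|as a thank you|reward|gift card)\b",
    "bypass_process": r"\b(keep this confidential|skip the usual|without approval)\b",
}


def coercive_cues(message: str) -> List[str]:
    """Return the sorted names of the cue categories found in the message."""
    text = message.lower()
    return sorted(name for name, pattern in CUE_PATTERNS.items() if re.search(pattern, text))


if __name__ == "__main__":
    suspicious = (
        "This is the CFO. I need you to wire the funds immediately. "
        "Legal has already approved it, so skip the usual sign-off and keep this confidential."
    )
    print(coercive_cues(suspicious))
    print(coercive_cues("Lunch is at noon on Friday."))
```

A message that combines several categories, such as authority plus urgency plus a request to skip approvals, is a reasonable trigger for verifying the request through a separate, known channel.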

Best practices for preventing phishing attacks in 2025

Threat actors use social media and corporate leadership materials with AI to make their phishing attempts look more legitimate.

It’s important to secure your accounts and IT infrastructure and be on the lookout for hyper-realistic phishing materials.

Here are some best practices for preventing AI-enabled phishing attempts:

- Enable Multi-factor Authentication (MFA): MFA adds an extra layer of security by requiring multiple forms of verification, making it harder for threat actors to infiltrate systems. While MFA won’t stop phishing threats, it helps protect against unauthorized access.

- Conduct regular vulnerability assessments: Regularly test your systems to identify and patch vulnerabilities before attackers can exploit them.

- Educate your team: Train employees to recognize phishing attempts and other social engineering tactics used by APT groups, and to follow data protection protocols. Phishing simulations, often run as part of red teaming exercises, can be effective because they provide real-world scenarios.

- Invest in threat intelligence: Use services, such as CybelAngel, that provide real-time data on potential threats targeting your industry. Managing digital risk with actionable threat intelligence across surface, deep, and dark web sources helps detect a breach before it’s too late.
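To make the MFA recommendation more concrete, here is a minimal sketch of how a time-based one-time password (TOTP), one common second factor, can be computed and checked with Python's standard library. It follows the algorithm published in RFC 6238 with HMAC-SHA1. The function names and the shared secret in the usage lines are assumptions for this example, and a production system would rely on a vetted authentication library rather than this code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if at_time is None else at_time
    counter = int(now // step)  # number of 30-second steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at_time: Optional[float] = None) -> bool:
    """Check a submitted code using a constant-time comparison."""
    return hmac.compare_digest(totp(secret, at_time), submitted)


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # test secret from the RFC, not for real use
    print(totp(shared_secret, at_time=59))   # matches the RFC 6238 test vector: 287082
    print(verify(shared_secret, "000000", at_time=59))
```

Because the code changes every 30 seconds, a password stolen through phishing is not enough on its own to log in. A code can still be relayed by an attacker in real time, which is consistent with the point above that MFA limits unauthorized access without stopping phishing itself.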

Download our new annual report

Our 2025 External Threat Intelligence Report, authored by CybelAngel’s CISO, Todd Carroll, found that integrated AI-detection tools are the most effective way to identify and report AI-powered phishing attempts.

In the ever-evolving landscape of cybersecurity, staying ahead of emerging threats is crucial.

Our comprehensive 2025 report delves into the latest trends and tactics in AI-enhanced phishing, providing invaluable insights and actionable strategies to protect your organization.