In a Widening Threat Landscape, Deepfakes Stand Out

Thanks to deepfake technology, anyone can create realistic (but false) videos, audio, and images—with potentially devastating consequences.

Cybercriminals can use deepfakes to launch scams, run misinformation campaigns, and damage reputations. And as the technology becomes more sophisticated, it's getting harder to detect deepfakes before it's too late.

Let’s explore the rising threat and impact of deepfakes, along with real-life deepfake examples across the world. We’ll also show you how to protect your brand from deepfake cybercrime.

1. What are deepfakes in cybersecurity?

Deepfakes are fake or "synthetic" media: videos, images, or audio created using artificial intelligence. The technology analyzes real media of a person and then generates a convincing imitation with the same appearance, voice, and mannerisms.

A deepfake can make it look like someone said or did something that never actually happened. In some contexts this can be entertaining, but it's also a growing weapon of choice for cyber attacks.

In cybersecurity, deepfakes are used to deceive and manipulate. For example, malicious actors could use deepfake content for…

- Identity theft: Impersonating people to access and exploit sensitive information, such as through video or voice phishing attacks

- Disinformation campaigns: Manipulating public opinion, such as through fabricated news stories or fake statements from politicians, to drive their own agenda

- Extortion attempts: Using deepfake videos to place people in compromising scenarios, then demanding payment to keep the content from being circulated online

Ofcom explains that deepfakes are typically designed to 'demean, defraud, or disinform.'

In the past, it was fairly easy to spot fake content. But deepfake software such as DeepFaceLab is now so sophisticated that fake content is much harder to recognize. This makes deepfakes a rising cybersecurity threat.

2. Is deepfake technology illegal?

Not all deepfakes are harmful. In fact, some deepfakes can have positive uses. For example, filmmakers can use deepfake filters to reshoot scenes without the actors being present.

But when it comes to illegal deepfake activity, many countries don’t have specific laws. Some deepfake crimes can be addressed under wider categories, like fraud, defamation, or cybercrime.

For example, most US states have general criminal impersonation laws, and some states, such as California, have specific legislation for online impersonation.

But the legal ambiguity across the world can make it difficult to hold creators accountable, especially if a deepfake is shared anonymously or from a country with weaker regulations or less developed threat intelligence capabilities.

Plus, regulating deepfake technology is no easy task. It would be challenging for regulators to differentiate between legitimate and malicious deepfakes, let alone identify them all in the first place.

3. Deepfake cybersecurity trends for 2025

As machine learning grows more sophisticated, deepfakes are becoming more prevalent and harder to spot. Here are some deepfake cybersecurity trends to be aware of.

Deepfakes are on the rise

According to Ofcom, two out of five people have seen at least one deepfake in the last six months. This shows that deepfakes are already widespread online and on social media, creating countless opportunities for cybercriminals to exploit.

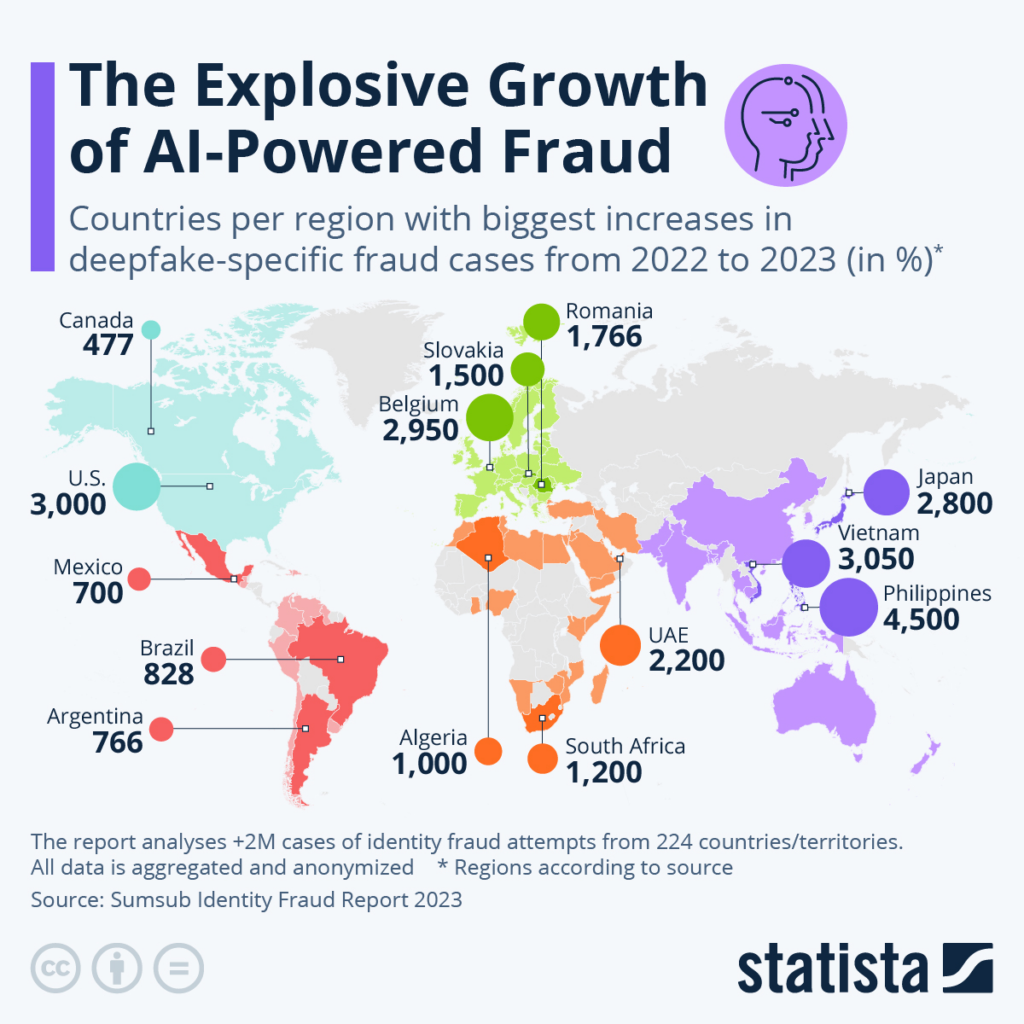

Deepfake frauds are increasing in the US and Asia

In an analysis of deepfake-specific fraud cases, the US and Asia showed the biggest increases between 2022 and 2023. Belgium and the United Arab Emirates also saw notable increases. But as this map shows, deepfake fraud is a global issue.

Map showing the biggest increases in deepfake fraud by region. Source.

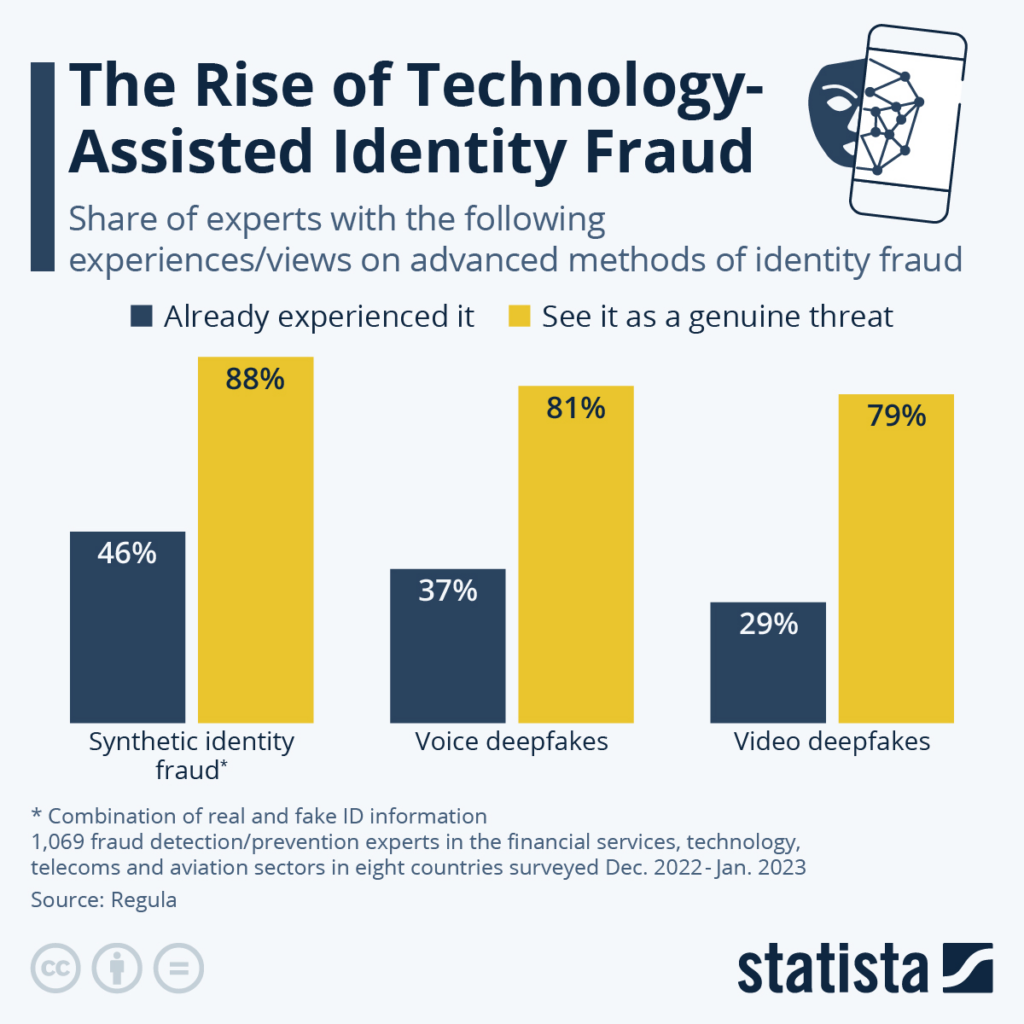

Audio deepfakes may pose more danger than videos

In an expert survey on technology-assisted identity fraud, respondents said they had either encountered voice deepfakes more often than video deepfakes or were more concerned about them. However, they rated synthetic identity fraud, where criminals combine fake and real ID information, as the greatest danger.

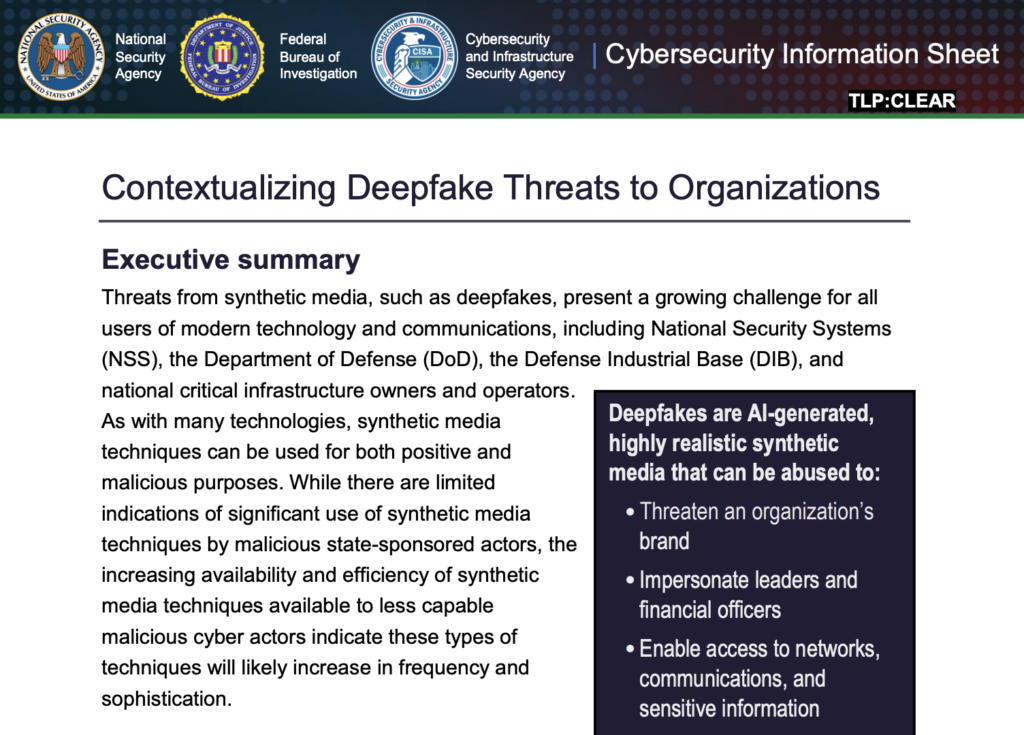

US security regulators are cracking down on deepfakes

The National Security Agency (NSA), the Federal Bureau of Investigation (FBI), and the Cybersecurity and Infrastructure Security Agency (CISA) collaborated last year to release a deepfake information sheet. It features ‘recommended steps and best practices’ to combat the rising trend.

Deepfake information sheet from US regulators. Source.

4. Real-life deepfake attacks: 4 examples

What does it look like when deepfakes are used by cybercriminals? Here are some deepfake attack examples in different regions.

Identity fraud in Africa

Africa has the second-highest increase in identity fraud rates, after the Middle East. Using deepfakes, criminals can run 100 fraudulent operations per year and generate over $2.5 million in revenue per month. And deepfake activity is on the rise.

A CEO impersonation at a UK energy firm

Many cybercrimes are motivated by profit, which makes any business that can move large sums of money a prime target for deepfake threats. For example, in 2019, fraudsters used AI voice-cloning software to mimic the chief executive of a UK energy firm's German parent company and request an urgent transfer of $243,000 to a Hungarian supplier.

Video call deepfakes in Hong Kong

When it comes to social engineering techniques, few are more elaborate than a fake video call. Earlier this year, a Hong Kong finance worker paid out $25 million after a deepfake video conference call. The scam used deepfakes of several different members of staff, including the company's chief financial officer.

Audio deepfakes in the Middle East

In 2020, a bank manager in the United Arab Emirates transferred $35 million at the request of cybercriminals who used artificial intelligence to clone the voice of a company director he had spoken with before. There have also been reports of people receiving calls that seemed to come from loved ones asking for money, only to discover that the callers were cybercriminals using audio deepfakes.

5. How can we defend against the use of deepfakes for cyber exploitation?

Here are some cybersecurity best practices that any organization can use to counter threat actors who use deepfakes.

- Invest in brand protection: Safeguard your domains, logos, and social media accounts to avoid being impersonated online. Brand protection services, such as CybelAngel, let you spot potential deepfake threats early on.

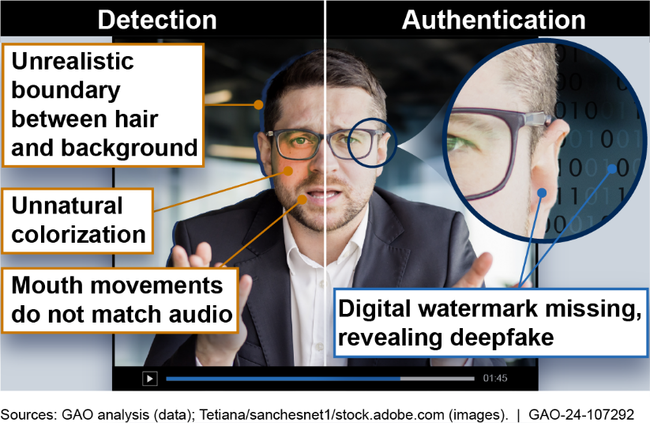

- Get deepfake detection technology: There are plenty of forensic and AI-powered tools that can identify synthetic media. They look for inconsistencies in images, video, or audio that could indicate manipulation.

- Harness authentication tools: Add digital watermarks, metadata, or blockchain-based records to your media so that you can confirm where it comes from. This can help audiences tell what's real from what's fake.

- Follow cybersecurity best practices: Set up security measures such as firewalls, anti-malware tools, and multi-factor authentication. You can also invest in an external attack surface management (EASM) tool such as CybelAngel.

- Develop crisis management strategies: Be ready for potential deepfake attacks with a plan on how you’ll communicate, be transparent, and collaborate with cybersecurity experts and law enforcement.

- Collaborate with technology partners: Stay in sync with cybersecurity firms and tech experts specializing in deepfake detection and prevention. This will help you to stay one step ahead of deepfake cybercriminals.
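To make the "authentication tools" idea more concrete, here is a minimal sketch of how an organization might attach a verifiable tag to its official media so that altered copies can be detected. It assumes a secret key held only by the publisher and uses HMAC-SHA256 from Python's standard library. The function names `sign_media` and `verify_media` and the key value are hypothetical, chosen for illustration; this is not how any particular watermarking or brand protection product works.

```python
import hashlib
import hmac

# Hypothetical secret key held by the publishing organization.
# In practice this would come from a key-management system, not source code.
SIGNING_KEY = b"replace-with-a-securely-stored-secret"


def sign_media(media_bytes: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a hex HMAC-SHA256 tag for the exact bytes of a media file."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Check that the media bytes match a previously published tag.

    Any change to the file, even a single byte, produces a different tag.
    compare_digest avoids leaking timing information during the comparison.
    """
    expected = sign_media(media_bytes, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"...bytes of an official company video..."
    tag = sign_media(original)  # published alongside the video

    tampered = original.replace(b"official", b"fake")
    print(verify_media(original, tag))  # True
    print(verify_media(tampered, tag))  # False
```

Because HMAC uses a shared secret, only parties holding the key can verify a tag, so this approach suits internal checks (for example, confirming that a video circulating among staff is the one the communications team released). For public verification, a public-key signature scheme or an industry provenance standard would be the more appropriate choice. Note that a tag only proves a file is unchanged since it was signed; it cannot tell you whether unsigned content is a deepfake.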

Conclusion

Deepfakes aren't inherently bad. It's how they're used that matters. And unfortunately, cybercriminals are becoming increasingly skilled at using deepfakes to exploit organizations' cyber vulnerabilities.

With the right authentication and detection tools in place, along with leading brand protection solutions such as CybelAngel, companies can protect against deepfake threats in real time and safeguard their business in the long run.